👋 Hi, this is Gergely with a subscriber-only issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and startups through the lens of engineering managers and senior engineers. If you’ve been forwarded this email, you can subscribe here. What’s Changed in 50 Years of Computing: Part 3How has the industry changed 50 years after the ‘The Mythical Man-Month’ was published? A look into estimations, developer productivity and prototyping approaches evolving.‘The Mythical Man-Month’ by Frederick P. Brooks was published in 1975 – almost 50 years ago; and the book still bears an influence: tech professionals quote it today, like “Brooks’ Law;” the observation that adding manpower to a late software project makes it more late. When Brooks wrote Mythical Man-Month, he was project manager of the IBM System/360 operating system, one of the most complex software projects in the world at the time. The book collates his experience of building large and complex software during the 1970s, and some best practices which worked well. I’ve been working through this book written near the dawn of software to see which predictions it gets right or wrong, what’s different about engineering today – and what stays the same. In Part 1 of this series, we covered chapter 1-3, and chapters 4-7 in Part 2. Today, it’s chapters 8, 9, and 11, covering:

The bottom of this article could be cut off in some email clients. Read the full article uninterrupted, online. 1. EstimationChapter 8 is “Calling the shot,” about working out how long a piece of software takes to build. It’s always tempting to estimate how long the coding part of the work should take, multiply that by a number (like 2 or 3), and get the roughly correct estimate. However, Brooks argues this approach doesn’t work, based on his observation of how developers spent time in the ‘1970s. He said it was more like this:

Assuming this is the case, should one not “just” multiply the coding estimate by six? No! Errors in the coding estimate lead to absurd estimates, and it assumes you can estimate the coding effort upfront, which is rare. Instead, Brooks shares an interesting anecdote: “Each job takes about twice as long as estimated.” This is an anecdote shared with Brooks by Charles Portman, manager of a software division in Manchester, UK. Like today, delays come from meetings, higher-priority but unrelated work, paperwork, time off for illness, machine downtime, etc. Estimates don’t take these factors into account, making them overly optimistic in how much time a programmer really has. This all mostly holds true today; specifically that it still takes about twice as long to complete something as estimated, at least at larger companies. The rule of thumb to multiply estimates by two, to account for meetings, new priorities, holidays/sickness, etc, is still relevant. The only factor Brooks mentions in his 1975 book that’s no longer an issue is machine availability. 2. Developer productivityBrooks then moves to the question of how many instructions/words it’s reasonable to expect a programmer to produce, annually. This exploration becomes a bid to quantify developer productivity by instructions typed, or lines of code produced. Developer productivity in the 1970sLike many managers and founders today, Brooks wanted to get a sense of how productive software developers are. He found pieces of data from four different studies, and concluded that average developer output in the 1970s was: 600-5,000 program words per year, per programmer. Brooks collected data from four data sources on programming productivity. High-level languages make developers much more productive. Brooks cites a report from Corbató of MIT’s Project MAC reports, concluding:

Brooks also shared another interesting observation: “Normal” programs > compilers > operating systems for effort. Brooks noticed that compiler and operating systems programmers produce far fewer “words per year” than those building applications (called “batch application programs.”) His take:

Developer productivity todaySo, how has developer productivity changed in 50 years? Today, we’re more certain that high-level programming languages are a productivity boost. Most languages we use these days are high-level, like Java, Go, Ruby, C#, Python, PHP, Javascript, Typescript, and other object-oriented, memory-safe languages. Low-level languages like C, C++ and Assembly, are used in areas where high performance is critical, like games, low-latency use cases, hardware programming, and more niche use cases. The fact that we use high-level languages is testament to their productivity boosts. Studies in the years since have confirmed the productivity gains Brooks observed. A paper entitled Do programming languages affect productivity? A case study using data from open source projects investigated it:

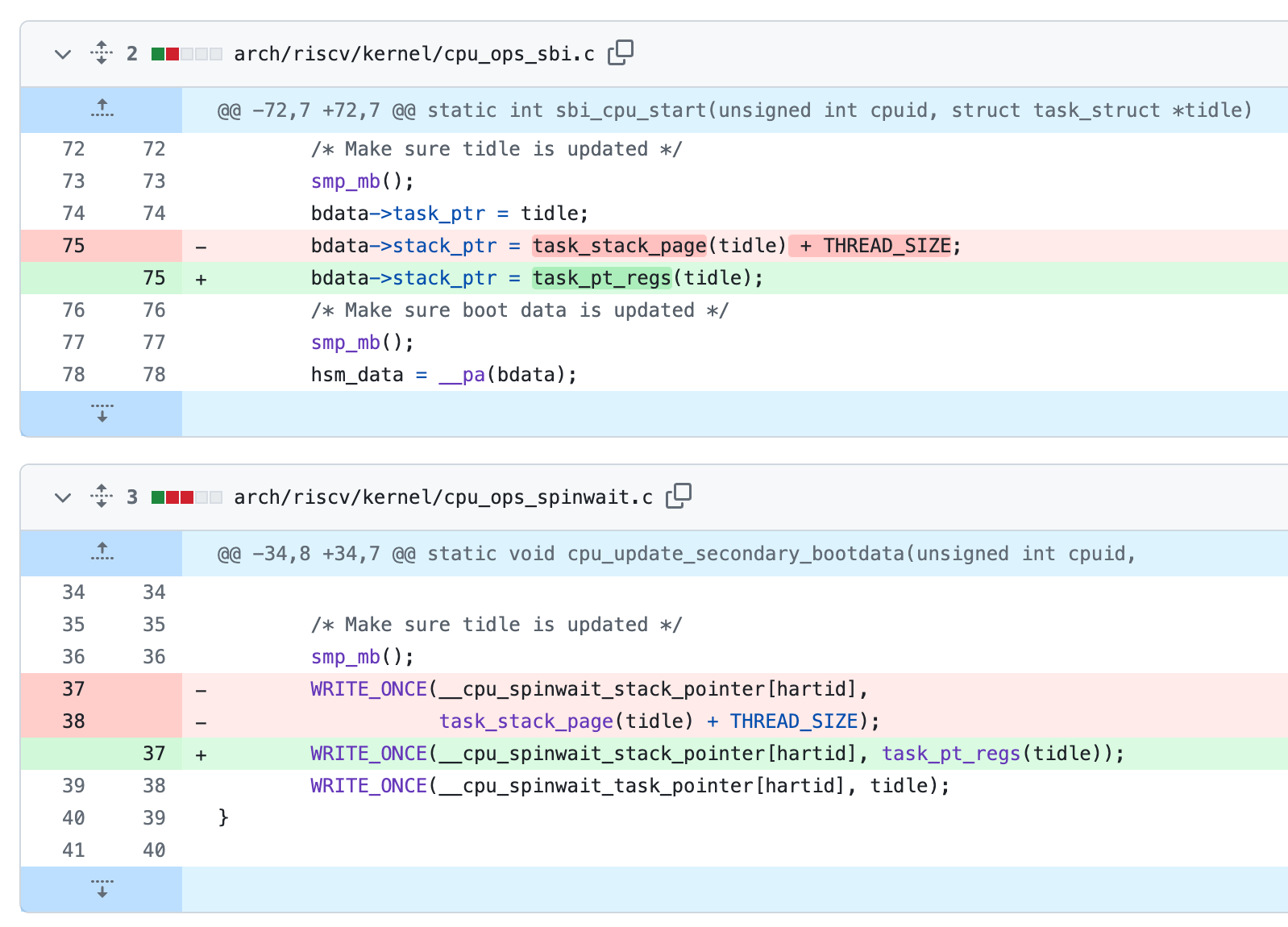

The study looked at nearly 10,000 open source projects, the number of lines developers committed, and whether the language was high-level or low-level. They found that high-level languages resulted in more lines of code committed per developer. Assuming that lines of code correlate with productivity, it means high-level languages are more productive. But we know lines of code are not particularly telling in themselves. However, if you’ve worked with low and high-level languages, you’ll know high-level languages are easier to read and write, and they offer an additional layer of abstraction for things like memory management, hardware interaction, and error handling. They require less onboarding and expertise to write, and are generally harder to make errors with. So it’s little surprise that unless there’s a strong reason to go low-level, most developers choose high-level languages. It’s interesting that languages offering the performance benefits of low-level languages with the clean syntax of high-level languages seem to be getting more popular; a good example of which is Rust. OS or kernel development is still much slower than application development today. Brooks’ observation that operating system and compiler developers made far fewer code changes annually than application developers – despite also working fulltime as programmers – also remains true. The more critical or widely-used a system is, the more care is needed when making changes. The Linux kernel illustrates just how small many changes are; many are only a few lines: here’s a 4-line change to switch to new Intel CPU model defines, or a five-line change fixing a threading bug:

It’s worth noting there are often no unit tests or other forms of automated tests in key systems like operating system kernels, due to the low-level software. This means changes to the kernel take much more time and effort to verify. Behind every line change, there’s almost always more deliberation, experimentation, and thought. Lines of code output-per-developer definitely feels like it has increased since the 1970s. It’s amusing to read that the average programmer wrote around 40-400 “instructions” per month, back then. Of course, it’s worth keeping in mind that most of the code was in lower level languages, and some of it applied to operating systems’ development. Checking GitHub data for some more popular open source projects, I found:

These figures suggest it’s fair to say developers today produce more code. My sense is that this change is due to using higher-level languages, modern tools like automated testing (tests also count as lines of code,) and more safety nets being in place that enable faster iteration. Of course, coupling business value to lines of code remains largely futile. We’re seeing developers being able to “output” and interpret more lines of code than before, but it’s pretty clear that there’s a limit on how much code is too much. This is why mid-size and larger companies push for small pull requests that are easier to review, and make potential bugs or issues easier to catch. We know far more about developer productivity these days, but haven’t cracked accurate measurement. The best data point Brooks could get his hands on for developer productivity was lines of code and numbers of words typed by a programmer. We know that looking at only this data point is useless, as it’s possible for developers to generate unlimited quantities of code while providing zero business value. Also, generating large amounts of code is today even easier with AI coding tools, making this data point still more irrelevant. This publication has explored the slippery topic of developer productivity from several angles:

Ever more data suggests that to measure developer productivity, several metrics in combination are needed; including qualitative ones, not only quantitative metrics that are easily translatable into figures. Qualitative metrics include asking developers how productive they feel, and what slows them down. Building a productive software engineering team is tricky; it takes competent software engineers, hands-on (or at least technical-enough) managers, a culture that’s about more than firefighting, and adjusting approaches to the needs of the business and teams. After all, there’s little point in having an incredibly productive engineering team at a startup with no viable business model. No amount of excellent software will solve this core problem! We previously covered how to stay technical as an engineering manager or tech lead, and also how to stay hands-on. 3. The vanishing art of program size optimization...Subscribe to The Pragmatic Engineer to unlock the rest.Become a paying subscriber of The Pragmatic Engineer to get access to this post and other subscriber-only content. A subscription gets you:

|

Search thousands of free JavaScript snippets that you can quickly copy and paste into your web pages. Get free JavaScript tutorials, references, code, menus, calendars, popup windows, games, and much more.

What’s Changed in 50 Years of Computing: Part 3

Subscribe to:

Post Comments (Atom)

I Quit AeroMedLab

Watch now (2 mins) | Today is my last day at AeroMedLab ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ...

-

code.gs // 1. Enter sheet name where data is to be written below var SHEET_NAME = "Sheet1" ; // 2. Run > setup // // 3....

No comments:

Post a Comment