👋 Hi, this is Gergely with a subscriber-only issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and startups through the lens of engineering managers and senior engineers.

A startup on hard mode: Oxide, Part 2. Software & Culture

Oxide is a hardware and software startup, assembling hardware for its Cloud Computer and building the software stack from the ground up. A deep dive into the company’s tech stack & culture.

Before we start: we are running research on bug management and “keep the lights on” (KTLO). This is an area many engineering teams struggle with, and we’d love to hear what works for you and your organization. You can share details here with us – with Gergely and Elin, that is. Thank you!

Hardware companies are usually considered startups on “hard mode” because hardware needs more capital and has lower margins than software; one sign of this is that there are far fewer hardware startup success stories than software ones. And Oxide is not only building novel hardware, a new type of server named “the cloud computer,” but is also producing the software stack from scratch.

I visited the company’s headquarters in Emeryville (a few minutes’ drive across the Bay Bridge from San Francisco) to learn more about how Oxide operates, with cofounder and CTO Bryan Cantrill.

In Part 1 of this mini-series, we covered the hardware side of the business: building a networking switch, using “proto boards” to iterate quickly on hardware, the hardware manufacturing process, and related topics. Today, we wrap up with:

As always, these deep dives into tech companies are fully independent, and I have no commercial affiliation with them. I choose businesses to cover based on interest from readers and software professionals, and also when it’s an interesting company or domain. If you have suggestions for interesting tech businesses to cover in the future, please share!

1. Evolution of “state-of-the-art” server-side computing

In Part 1, we looked at why Oxide is building a new type of server, and why now, in 2024. After all, building and selling a large, relatively expensive cloud computer as big as a server rack seems a bit of a throwback to the bygone mainframe era. The question is a good opportunity to look at how servers have evolved over 70 years. In a 2020 talk at Stanford University, Bryan gave an interesting overview. Excerpts below:

1961: IBM 709. This machine was one of the first to qualify as a “mainframe,” as it was large enough to run time-shared computing. It was a vacuum tube computer, weighed 33,000 pounds (15 tonnes), occupied 1,900 square feet (180 sqm), and consumed 205 kW. Today, a full rack consumes around 10-25 kW. Add to this the required air conditioning, which was an additional 50% in weight, space and energy usage!
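Taking the quoted figures at face value, and assuming the 50% air-conditioning overhead applies on top of the machine’s own 205 kW, a back-of-the-envelope comparison with a modern rack looks like this:

```latex
P_{\text{709+AC}} \approx 205\,\text{kW} \times 1.5 = 307.5\,\text{kW},
\qquad
\frac{307.5\,\text{kW}}{25\,\text{kW}} \approx 12,
\qquad
\frac{307.5\,\text{kW}}{10\,\text{kW}} \approx 31
```

So a single IBM 709 plus its cooling drew roughly as much power as 12 to 31 full racks do today.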

1975: PDP-11/70. Machines were getting smaller and more powerful.

1999: Sun E10K. Many websites used Sun servers in the late 1990s, when the E10K looked state-of-the-art. eBay famously started off with a 2-processor Sun machine, eventually running the website on a 64-processor, 64GB Sun E10K.

2009: x86 machines. Within a decade, Intel’s x86 processor family won the server battle on value for money, offering the same amount of compute for a fraction of the price charged by vendors like Sun. Around 2009, HP’s DL380 was a common choice. Initially, x86 servers had display ports and CD-ROM drives, which was odd on a server. The reason was that such a server was architecturally a personal computer, despite being rack-mounted. These machines were popular for the standout price-for-performance of the x86 processor.

2009: hyperscale computing begins at Google. Tech giants believed they could have better servers by custom-building their own server architecture from scratch, instead of using what was effectively a PC.

Google aimed to build the cheapest possible server for its needs, and optimized every part of the early design for this. This server got rid of unneeded things like the CD drive and several ports, leaving a motherboard, CPUs, memory, hard drives, and a power unit. Google kept iterating on the design.

2017: hyperscale computing accelerates. It wasn’t just Google that found vendors on the market didn’t cater to increasingly large computing needs. Other large tech companies decided to design their own servers for their data centers, including Facebook:

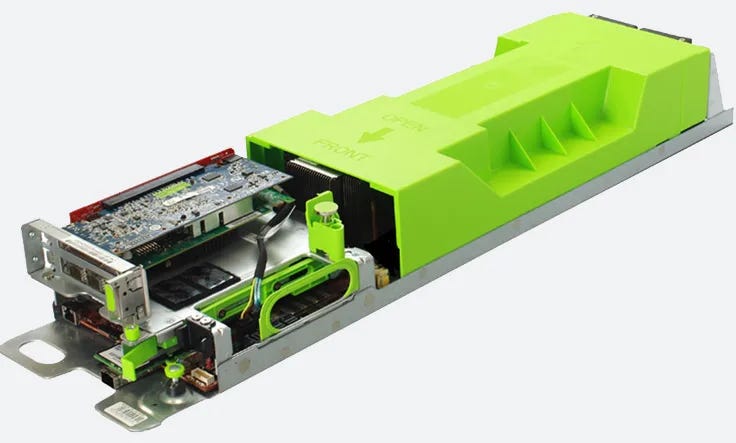

By then, hyperscale compute had evolved into compute sleds with no integrated power supply. Instead, they plugged into a DC bus bar. Most hyperscalers realized that optimizing power consumption was crucial for building efficient, large-scale compute. Bryan says:

2020: server-side computing still resembles 2009. While hyperscale computing went through a major evolution in a decade, a popular server in 2020 was the HPE DL560: it remains a PC design and ships with a DVD drive and display ports. Bryan’s observation is that most companies lack the “infrastructure privilege” to build and run their own custom solutions, unlike hyperscalers such as Google and Meta, which have greatly innovated in server-side efficiency. Why has there been no innovation in modernizing the server, so that companies can buy an improved server for large-scale use cases? Bryan says:

2. Software stack

Rust is Oxide’s language of choice for the operating system and backend services. Software engineer Steve Klabnik was previously on Rust’s core team, and joined Oxide as one of its first software engineers. On the DevTools.fm podcast, he outlined why a developer would choose Rust over C or C++ for systems programming:
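One commonly cited reason to pick Rust over C or C++ for systems code is memory safety checked at compile time, without a garbage collector. The minimal sketch below is a general illustration, not a quote from the podcast and not taken from Oxide’s codebase; the function names are hypothetical.

```rust
/// Bounds-checked read: `get` returns `None` for an out-of-range index,
/// whereas reading past the end of a C array is undefined behavior.
pub fn checked_read(buf: &[u8], idx: usize) -> Option<u8> {
    buf.get(idx).copied()
}

/// Takes a shared borrow (`&[u8]`): the caller keeps ownership, and `sum`
/// can read the buffer but can neither modify nor free it.
pub fn sum(buf: &[u8]) -> u32 {
    buf.iter().map(|&b| u32::from(b)).sum()
}

/// Takes ownership of `buf`; it is dropped (freed) exactly once when this
/// function returns. Any later use of the moved value by the caller, such as
/// `consume(v); v.len()`, is rejected at compile time ("borrow of moved
/// value"), which rules out use-after-free and double-free.
pub fn consume(buf: Vec<u8>) -> usize {
    buf.len()
}
```

For example, with `let v = vec![1u8, 2, 3];`, `checked_read(&v, 10)` returns `None` instead of reading out-of-bounds memory, `sum(&v)` returns `6`, and after `consume(v)` any further use of `v` fails to compile.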

Interestingly, Bryan reveals that going all-in on Rust greatly helped with hiring, probably due to a combination of the Rust community being relatively small and Oxide being open about its commitment to the language. Initially, it was harder to find qualified hardware engineers than software engineers, perhaps because software engineers interested in Rust had heard about Oxide.

Open source is a deliberate strategy for Oxide’s builds and software releases, and another differentiator from hardware vendors that ship custom hardware with custom, closed source software.

The embedded operating system running on the microcontrollers in Oxide’s hardware is called Hubris. (Note that this is not the operating system running on the AMD CPUs: that operating system is Helios, as discussed below.) Hubris is all-Rust and open source. Characteristics:

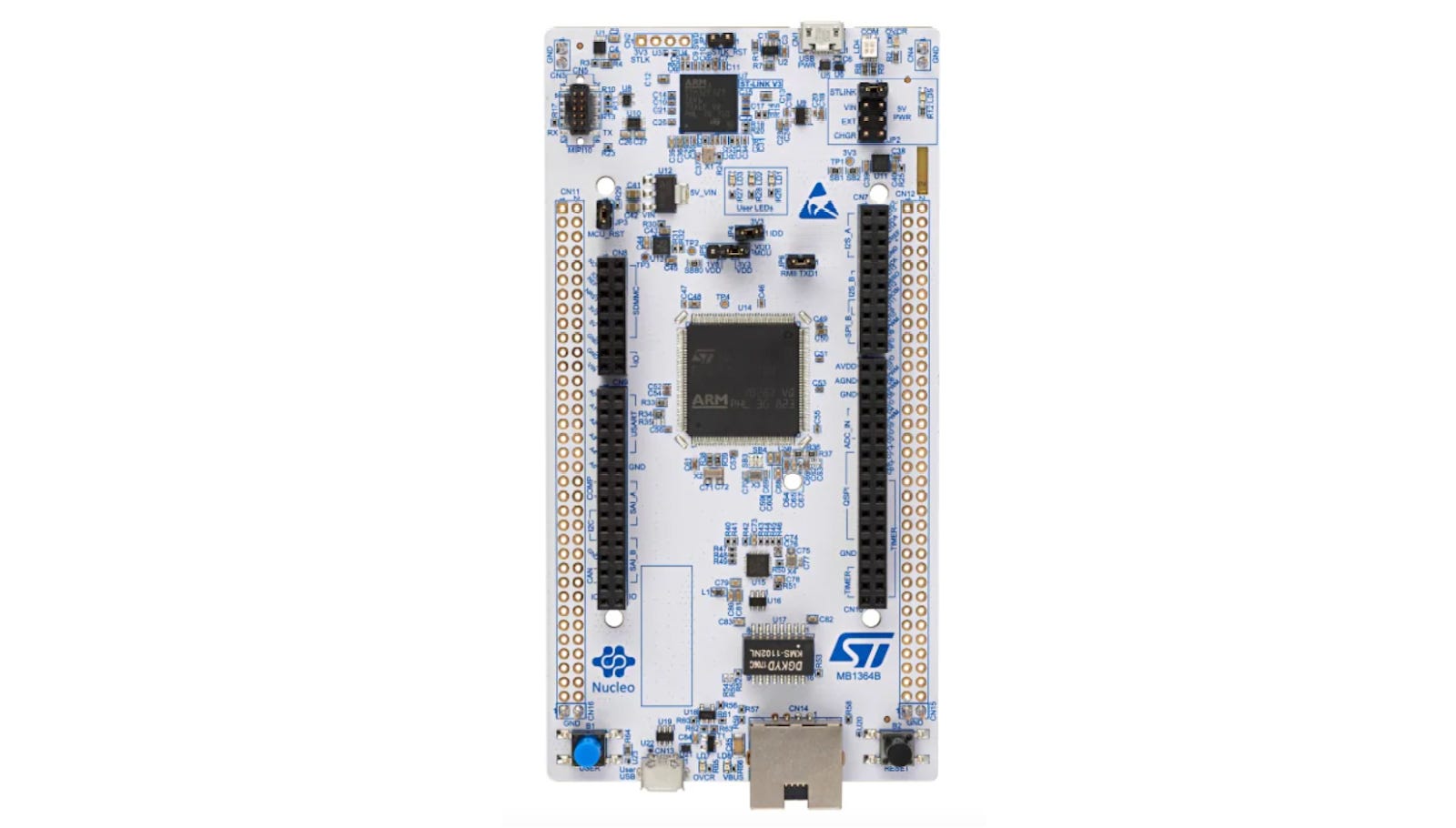

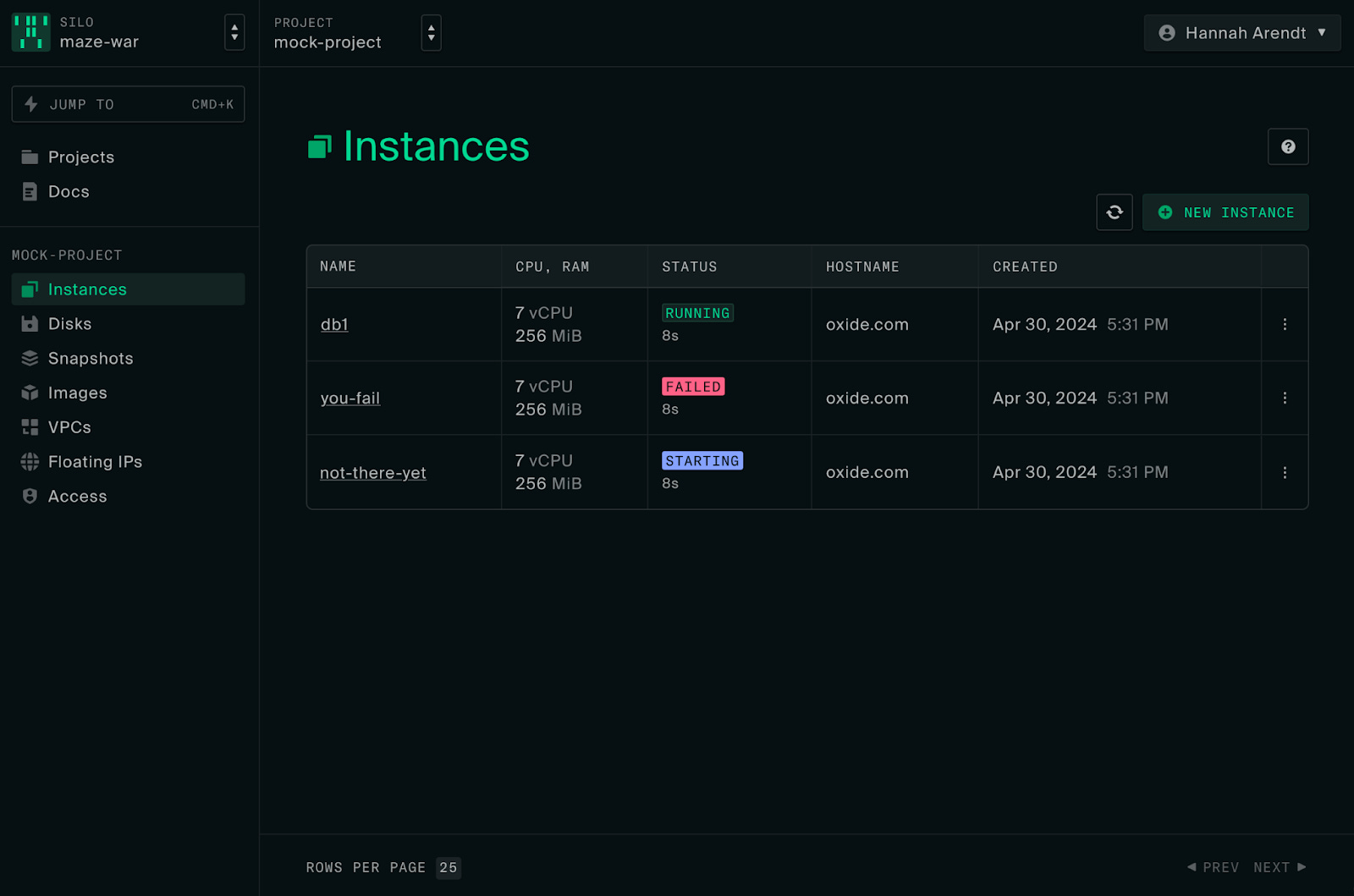

If you want to run Hubris on actual hardware and debug it with Humility, the Hubris debugger, you can order a board that costs around $30; the ST Nucleo-H753ZI evaluation board is suitable.

The hypervisor. A hypervisor is important software in cloud computing. Also known as a “Virtual Machine Monitor (VMM),” the hypervisor creates and runs virtual machines on top of physical machines. Server hardware is usually powerful enough that it’s worth dividing one physical server into multiple virtual machines, or at least being able to. Oxide uses a hypervisor built on the open source bhyve, which is itself built into illumos, a Unix operating system. Oxide maintains its own illumos distribution, called Helios, and builds its own Rust-based VMM userspace, called Propolis. Oxide shares more about the hypervisor’s capabilities in its online documentation.

Oxide has also open sourced many other pieces of software, either purpose-built for its own stack or simply neat tools:
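To make the hypervisor’s job of dividing one physical server into several virtual machines more concrete, here is a toy model of just the capacity side of it: carving one host’s vCPUs and memory into VMs. It is a minimal sketch under stated assumptions: `Host`, `VmSpec` and `place_vms` are hypothetical names, VMs are admitted greedily in request order with no overcommit, and none of this reflects how bhyve or Propolis actually allocate resources.

```rust
/// Hypothetical capacity of one physical server (host).
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Host {
    pub vcpus: u32,
    pub mem_gib: u32,
}

/// Hypothetical resource request for one virtual machine.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct VmSpec {
    pub vcpus: u32,
    pub mem_gib: u32,
}

/// Admits VMs in request order, reserving their vCPUs and memory only if
/// enough unreserved capacity remains (no overcommit). Returns the indices
/// of admitted requests and the host capacity left over.
pub fn place_vms(host: Host, requests: &[VmSpec]) -> (Vec<usize>, Host) {
    let mut free = host;
    let mut admitted = Vec::new();
    for (i, vm) in requests.iter().enumerate() {
        if vm.vcpus <= free.vcpus && vm.mem_gib <= free.mem_gib {
            free.vcpus -= vm.vcpus;
            free.mem_gib -= vm.mem_gib;
            admitted.push(i);
        }
    }
    (admitted, free)
}
```

With a 64-vCPU, 512 GiB host and requests of 32/128, 16/256, 32/64 and 16/64 (vCPUs/GiB), the third request is rejected because only 16 vCPUs remain, and the host ends with 0 vCPUs and 64 GiB free. A production hypervisor also manages devices, I/O and guest execution, which this model ignores.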

Other technologies Oxide uses:

3. Compensation & benefits

Oxide chose a radically different compensation approach from most companies, with almost everyone earning an identical base salary of $201,227. The only exception is some salespeople, who have a lower base salary plus commission. How did this unusual setup emerge? Bryan shares that the founders brainstormed to find an equitable compensation approach that worked across different geographies. Ultimately, it came down to simplicity, he says:

This unusual approach supports company values:

The company updates the base salary annually to track inflation: in 2024, everyone makes $201,227. Bryan acknowledged that this model may not scale if Oxide employs large numbers of people in the future, but he hopes the spirit of this approach to compensation will remain.

Other benefits. On top of salary, Oxide offers benefits, mostly health insurance, which is very important in the US:

In another example of transparency, the policy documentation for these benefits was made public in 2022 in a blog post by systems engineer iliana etaoin.

4. Heavyweight hiring process

Oxide’s hiring process is unlike anything I’ve seen, and we discussed it with the team during a podcast recording at their office...
