👋 Hi, this is Gergely with a subscriber-only issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and startups through the lens of engineering managers and senior engineers. If you’ve been forwarded this email, you can subscribe here. How do AI software engineering agents work?Coding agents are the latest promising Artificial Intelligence (AI) tool, and an impressive step up from LLMs. This article is a deep dive into them, with the creators of SWE-bench and SWE-agent.In March, Cognition Labs grabbed software engineers’ attention by announcing “Devin,” what it called the “world’s first AI software engineer,” with the message that it set a new standard as a SWE-bench coding benchmark. As of today, Devin is closed source and in private beta, so we don’t know how it works, and most people cannot access it. Luckily for us, the team behind the SWE-bench benchmark has open sourced an AI agent-based “coding assistant” that performs comparably on this benchmark as Devin did. Their solution is SWE-agent, which solution solves 12.5% of the tickets in this benchmark correctly, fully autonomously (this is about 4x of what the best LLM-only model performed at.) SWE-agent was built in 6 months by a team of 7 people at Princeton University, in the US. The team also publishes research papers about their learnings, alongside it being open source. In today’s issue, we talk with Ofir Press, a postdoctoral research fellow at Princeton, and former visiting researcher at Meta AI and MosaicML. He’s also one of SWE-agent’s developers. In this article, we cover:

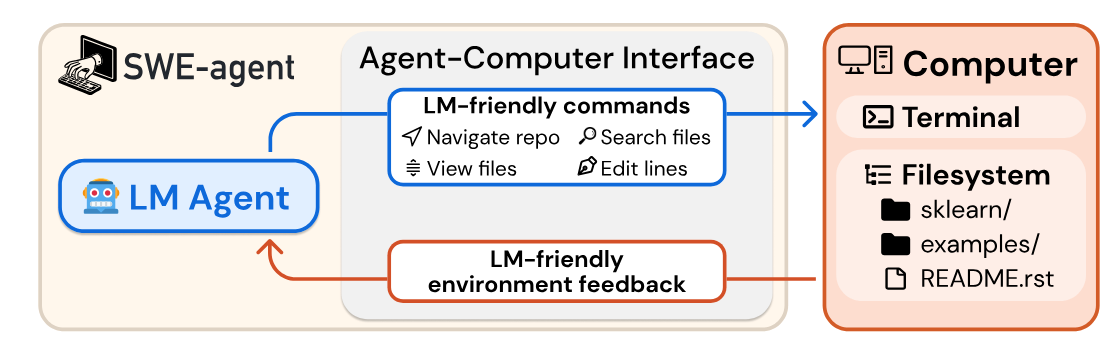

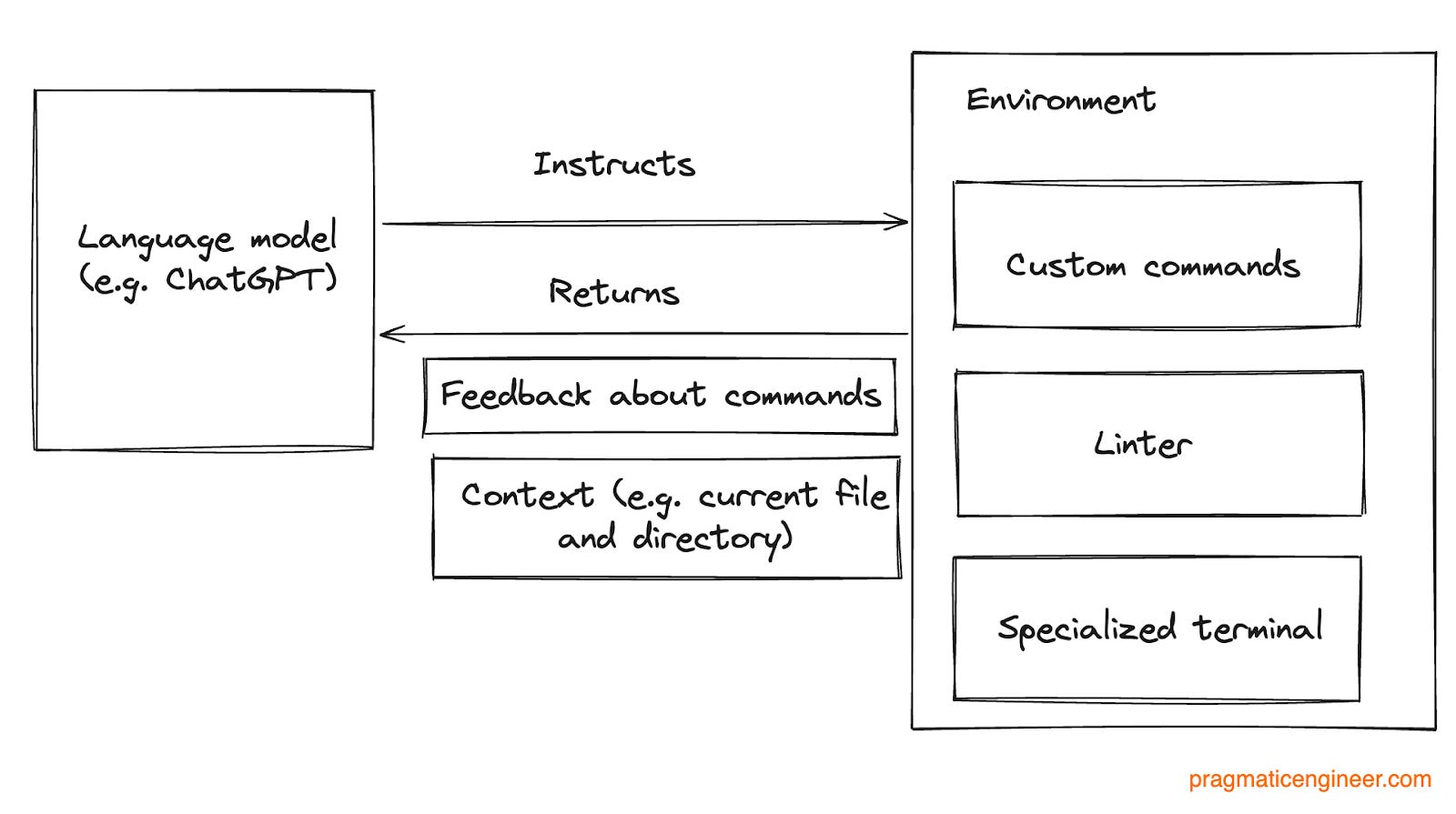

Before starting, a word of appreciation to the Princeton team for building SWE-bench, already an industry-standard AI coding assessment evaluation toolset, and for releasing their industry-leading AI coding tool, SWE-agent, as open source. Also, thanks for publishing a paper on SWE-agent and ACI interfaces. Also, a shout out to everyone building these tools in the open; several are listed at the end of section 2, “How does SWE-agent work?” The bottom of this article could be cut off in some email clients. Read the full article uninterrupted, online. 1. The Agent-Computer-InterfaceSWE-agent is a tool that takes a GitHub issue as input, and returns a pull request as output, which is the proposed solution. SWE-agent currently uses GPT-4-Turbo under the hood, through API calls. As the solution is open source, it’s easy enough to change the large language model (LLM) used by the solution to another API, or even a local model; like how the Cody coding assistant by Sourcegraph can be configured to use different LLMs. Agent-Computer Interface (ACI) is an interface for large language models (LLMs) like ChatGPT to work in an LLM-friendly environment. The team took inspiration from human-computer interaction (HCI) studies, where humans “communicate” with computers via interfaces that make sense, like a keyboard. In turn, computers communicate “back” via interfaces which humans can understand, like a computer screen. The AI agent also uses a similar type of interface when it communicates with a computer:

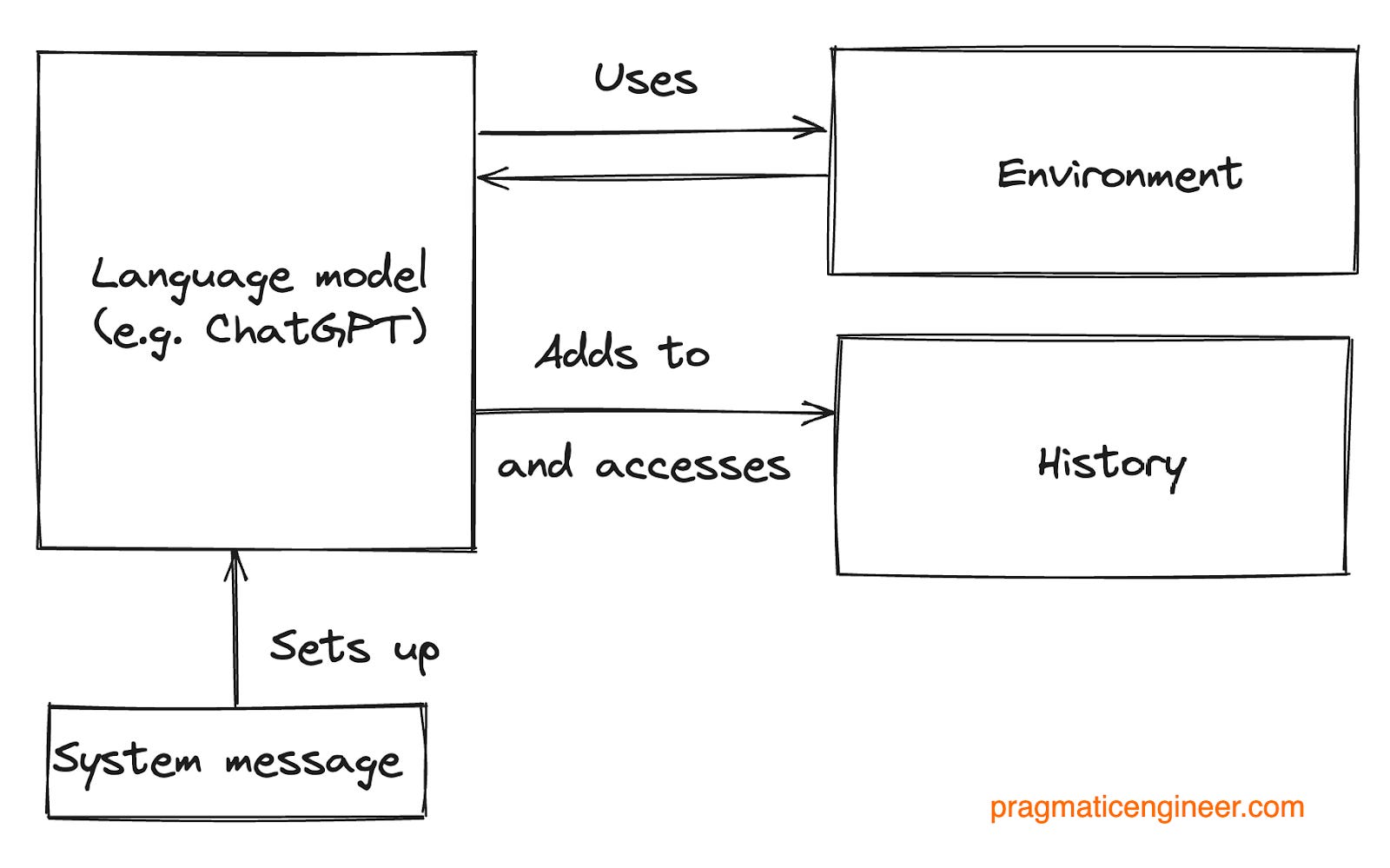

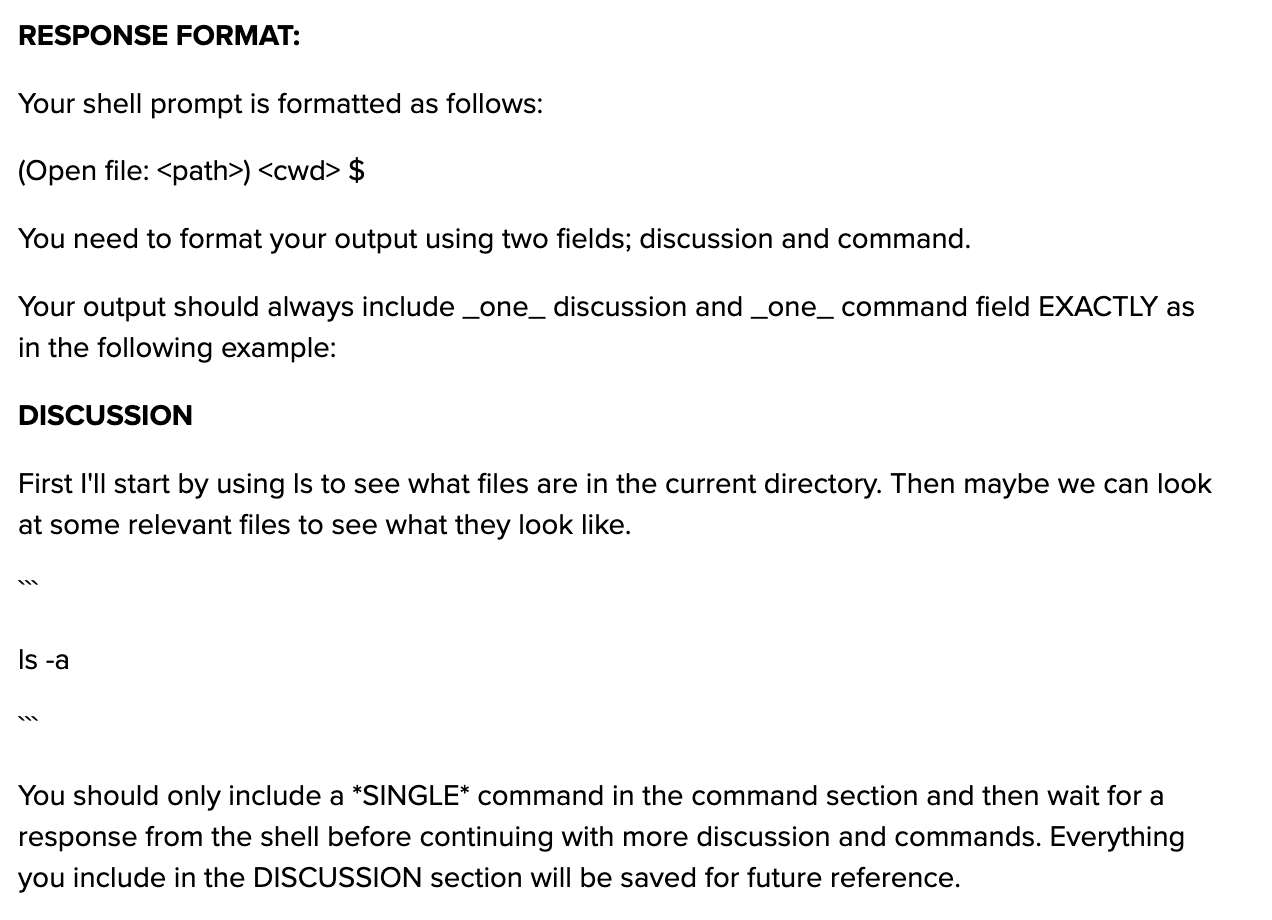

Let’s go through how the ACI works from semantic and structural points of view. ACI from the LLM point of viewA good way to conceive of an agent is as a pre-configured LLM session with specialized tooling:

The full system message is about 800 words long, and includes all system commands the LLM can execute.

ACI from a software component point of viewThe ACI consists of these “software building blocks”:

Configuring the ACI is easy enough in the case of SWE-bench:

Guiding the ACI with instructionsBefore executing commands, additional tips are given to the SWE-agent ACI. These are likely scenarios in which the agent struggled in the past, and the tips to compensate for this. Here are some examples of tips, from SWE-agent’s additional prompts:

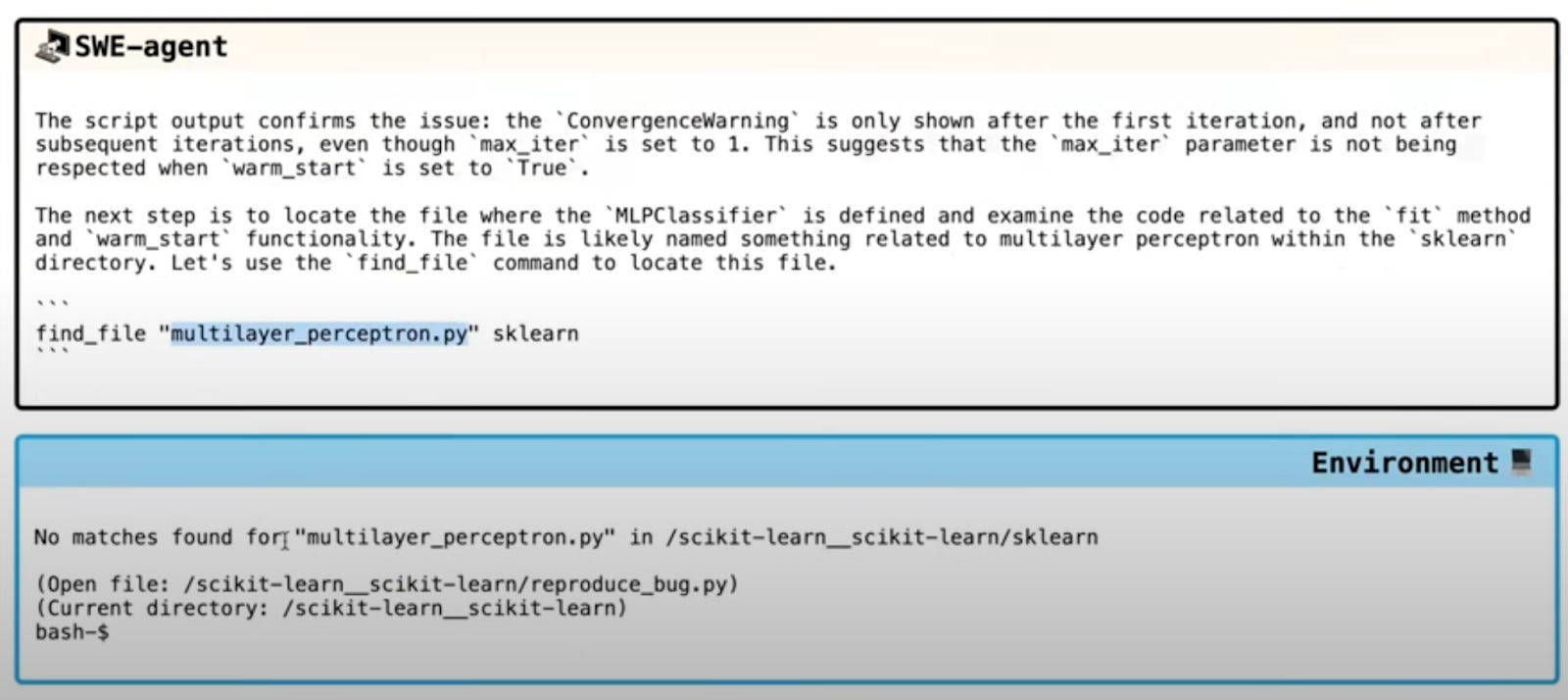

Amusingly, these instructions could be for an inexperienced human dev learning about a new command line environment! 2. How does SWE-agent work?SWE-agent is an implementation of the ACI model. Here’s how it works: 1. Take a GitHub issue, like a bug report or a feature request. The more refined the description, the better. 2. Get to work. The agent kicks off, using the issue as input, generating output for the environment to run, and then repeating it. Note that SWE-agent was built without interaction capabilities at this step, intentionally. However, you can see it would be easy enough for a human developer to pause execution and add more context or instructions. In some way, GitHub Copilot Workspaces provides a more structured and interactive workflow. We previously covered how GH Copilot Workspace works. 3. Submit the solution. The end result could be:

It usually takes the agent about 10 “turns” to reach the point of attempting to submit a solution. Running SWE-agent is surprisingly easy because the team added support for a “one-click deploy,” using GitHub Codespaces. This is a nice touch, and it’s good to see the team making good use of this cloud development environment (CDE.) We previously covered the popularity of CDEs, including GitHub Codespaces. A prerequisite for using SWE-agent is an OpenAI API key, so that the agent can make API requests to use ChatGPT-4-Turbo. Given the tool is open source, it’s easy enough to change these calls to support another API, or even talk with a local LLM. Keep in mind that while SWE-agent is open source, it costs money to use GitHub Codespace and OpenAI APIs, as is common with LLM projects these days. The cost to run a single test is around $2 per GitHub issue. TechnologySWE-agent is written in Python, and this first version provides support for solving issues using it. The team chose this language for practical reasons: the agent was designed to score highly on the SWE-bench benchmark. And most SWE-bench issues are in Python. At the same time, SWE-agent already performs well enough with other languages. The SWE-agent team already proved that adding support for additional languages works well. They ran a test on the HumanEvalFix benchmark, which has a range of problems in multiple languages (Python, JS, Go, Java, C++ and Rust,) that are much more focused on debugging and coding directly, not locating and reproducing an error. Using its current configuration, the agent performed well on Javascript, Java and Python problems. Adding support for new languages requires these steps:

Ofir – a developer of SWE-agent – summarizes:

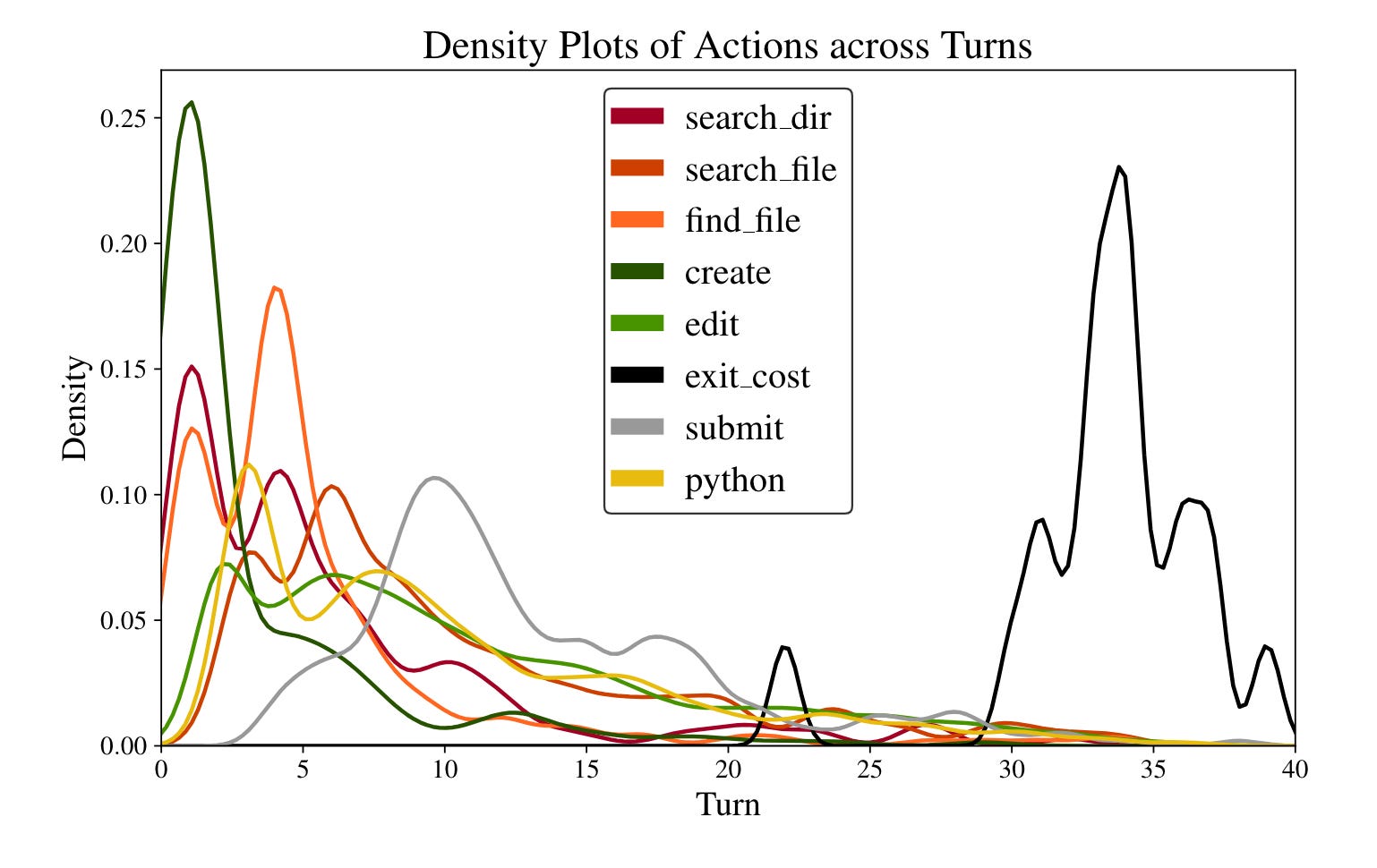

What the agent usually doesIn the SWE-agent paper, the researchers visualized what this tool usually does during each turn, while trying to resolve a GitHub issue:

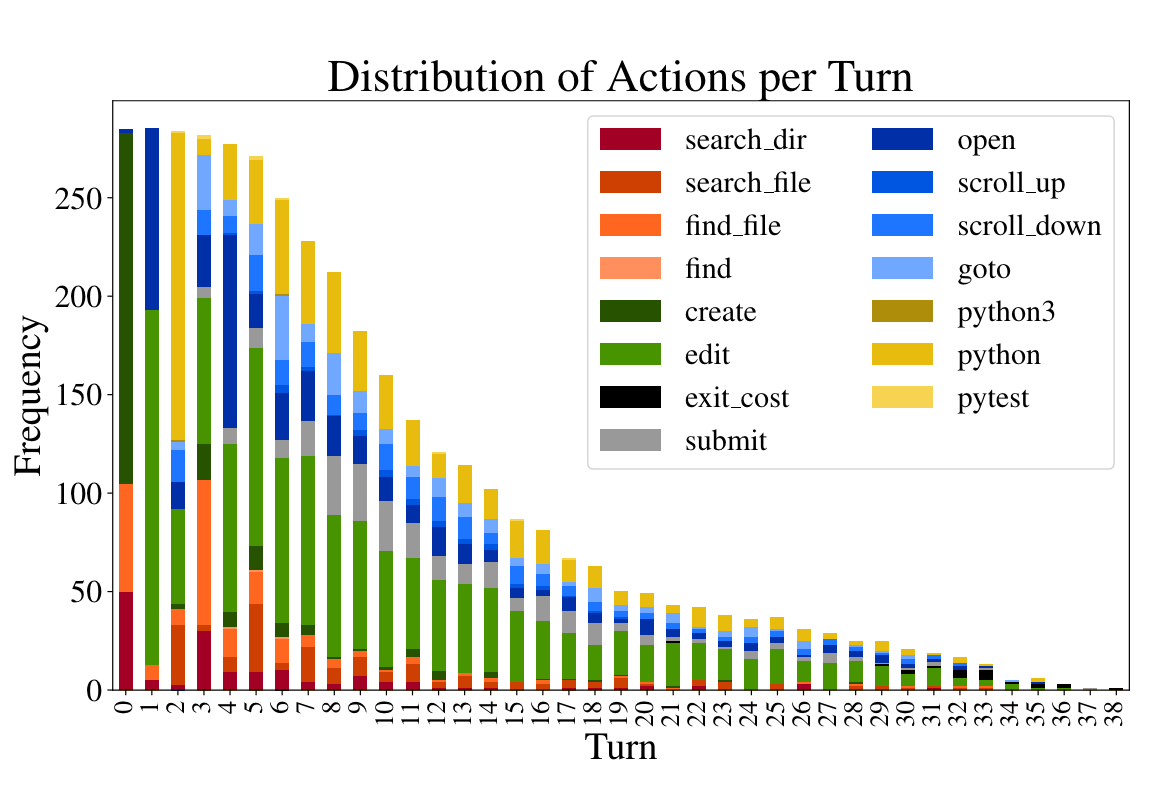

Frequently, the agent created new files, search files, and directories early in the process, and began to edit files and run solutions from the second or third turn. Over time, most runs submitted a solution at around turn 10. Agents that didn’t submit a solution by turn 10 usually kept editing and running the files, until giving up. Looking across all the agent’s actions, it mostly edits open files:

From turn one, the dominant action is for the agent to edit a file, then run Python to check if the change works as expected. The linter makes SWE-agent work a lot better. 51.7% of edits had at least one error, which got caught by the linter, allowing the agent to correct it. This number feels like it could be on-par with how a less experienced engineer would write code. Experienced engineers tend to have good understandings of the language, and if they make errors that result in linting errors, it’s often deliberate. Team behind SWE-agentWith companies raising tens or hundreds of millions of dollars in funding to compete in this field, it’s interesting to look at the small team from within academia that built SWE-agent in 6 months – with only two full-time members:

Led by John Yang and Carlos E. Jimenez, everyone on the team has been active in the machine learning research field for years. And it’s worth noting that only John and Carlos worked full-time on SWE-agent, as everyone had other academic duties. The team started work in October 2023 and published the initial version in April 2024. Building such a useful tool with a part-time academic team is seriously impressive, so congratulations to all for this achievement. A note on SWE-benchThe team started to build SWE-agent after the core of team members had released the SWE-bench evaluation framework in October 2023. The SWE-bench collection is now used as the state-of-the-art LLM coding evaluation framework. We asked Ofir how the idea for this evaluation package came about:

SWE-bench mostly contains GitHub issues that use Python, and it feels like there’s a bias towards issues using the Django framework. We asked Ofir how this Python and Django focus came about:

Open source alternatives to SWE-agentThis article covers SWE-agent, but other open source approaches in the AI space are available. Notable projects with academic backing:

Notable open source projects:

3. Successful runs and failed ones...Subscribe to The Pragmatic Engineer to read the rest.Become a paying subscriber of The Pragmatic Engineer to get access to this post and other subscriber-only content. A subscription gets you:

|

Search thousands of free JavaScript snippets that you can quickly copy and paste into your web pages. Get free JavaScript tutorials, references, code, menus, calendars, popup windows, games, and much more.

How do AI software engineering agents work?

Subscribe to:

Post Comments (Atom)

I Quit AeroMedLab

Watch now (2 mins) | Today is my last day at AeroMedLab ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ...

-

code.gs // 1. Enter sheet name where data is to be written below var SHEET_NAME = "Sheet1" ; // 2. Run > setup // // 3....

No comments:

Post a Comment