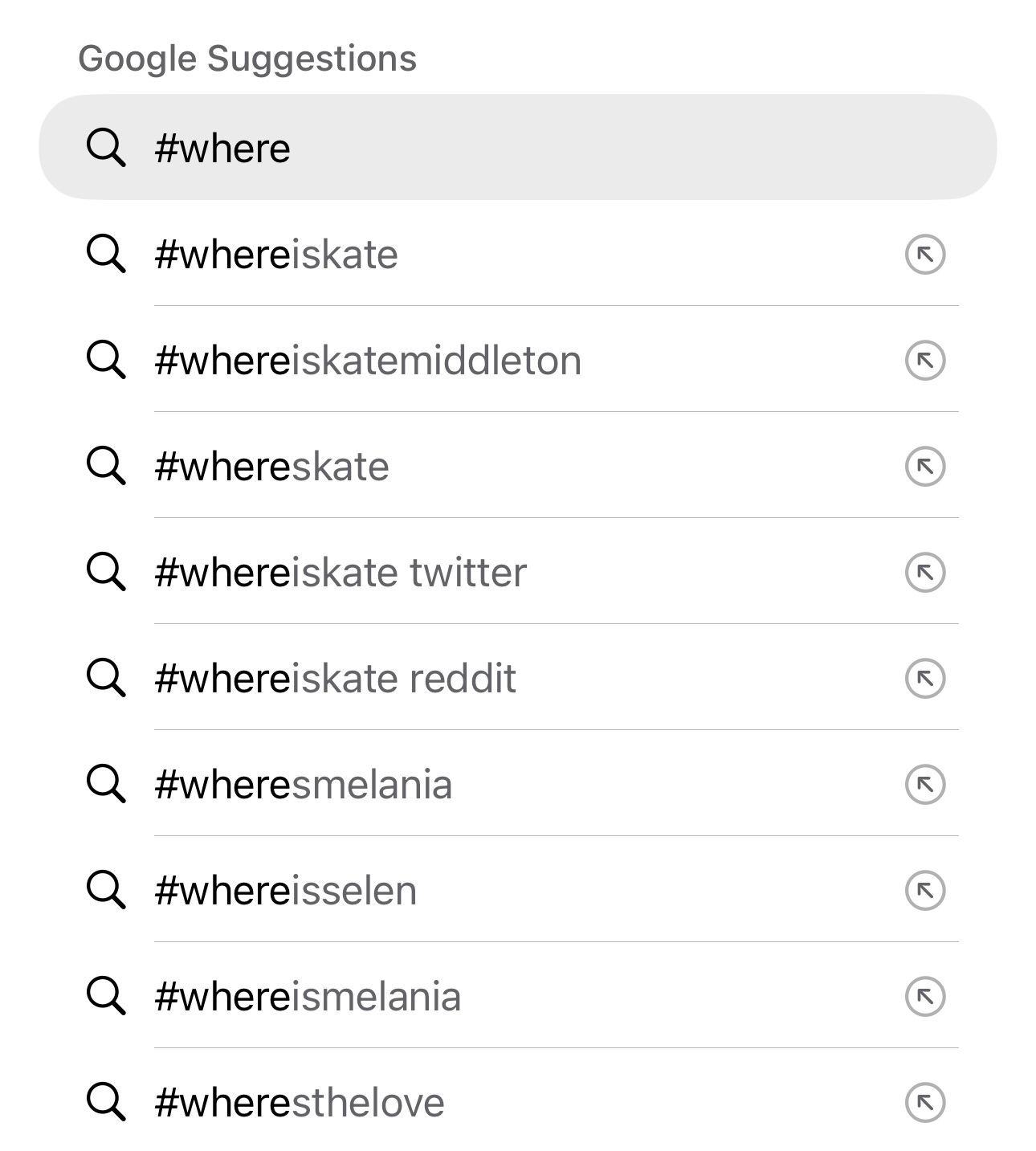

Kate Middleton and the fracturing of reality

How the internet got seduced by the dangerous game of shared myth-making.

If you’ve been online anytime in the past few weeks, you’ve probably seen people speculating about the whereabouts of Kate Middleton, who until last week had not been seen publicly since she was admitted to the hospital for abdominal surgery in January. If you’ve been online since Friday, you have undoubtedly seen the unfortunate news that she was diagnosed with cancer and is undergoing chemotherapy, which is why she has been out of the public eye. The news of her cancer diagnosis, delivered by the Princess of Wales herself, provided the answer that so many have been looking for, yet it has done little to put an end to the speculation that began weeks ago when she first went into the hospital.

[Updated to add: We’ve seen the same phenomenon play out in the days following the devastating Key Bridge collapse, as social media users have falsely linked the disaster to everything from child sex trafficking to terrorism. Days later, there are still new alternative narratives being assembled on social media and floated on Fox News, and this process will continue for the foreseeable future.]

That’s in large part because conspiracy theories, once they start circulating and become adopted by online influencers and amplified by algorithms and media outlets, are nearly impossible to reel back in, even with the resources of the British monarchy behind you. But there’s more to it than that. In our current online information environment, conspiracy theories, rumors, and false narratives offer much more than just the information that comprises them.
Among other things, they provide a form of entertainment, a pathway to internet fame, an easy way to make money, and perhaps most importantly, a shared activity that allows users to signal their affiliation with a particular group or stance. This phenomenon represents a new form of identity expression and a way to enforce group boundaries and build solidarity in online communities, but at a great cost.

This latest incident involving Kate Middleton, and the way it was handled, serves as a lesson and a warning about the state of our information environment and just how easy it is to start a fire that can’t be contained. It also provides a startling look at the participatory dynamics involved in modern-day myth-making, and gives us a window into the future of what these dynamics might look like as AI enters the scene and becomes an active participant in the process of fracturing whatever shared reality we have left. If we want to avoid the worst of these possible futures, we first have to understand how we got to the point where we are today. So let’s start by taking a look at the events of the past few weeks and what they can tell us about the challenges ahead.

The toxic reality of participatory disinformation

Kate’s sudden disappearance from the public eye just after Christmas, combined with a lack of communication from the royal family, left the public confused, concerned, and searching for answers where none existed: a perfect storm that created the ideal conditions for conspiracy theories to thrive. In short, the royal family’s decision to withhold any and all information about Kate’s whereabouts and well-being created a data void that was then easily manipulated and filled with speculation, rumors, and conspiratorial claims.

Data voids describe a phenomenon in the information environment in which little or no information exists online for a given topic, resulting in search queries that return few or no credible, relevant results.
This problem can also arise when the supply of credible, discoverable, and relevant information fails to keep up with the demand for information in a given area, a specific type of data void known as a data deficit. Data voids have proven to be easily exploited and manipulated by bad actors because that lack of information means that it is easier to hijack search algorithms and keywords, and therefore to make false and inflammatory information appear prominently in search results.

Hence, when people went online to search for information about Kate, the first information they encountered, particularly on social media platforms, included a variety of rumors and conspiracy theories, with very little credible reporting or contextual information to be found. As these rumors started making the rounds, more and more people became curious about the story and went online to see what was going on, which had the effect of further amplifying keywords and hashtags related to Kate, and making the topic trend across most major social media platforms. Once that cycle starts, it is hard to bring it to an end, as trending topics attract even more attention to the story and incentivize online influencers to jump on the bandwagon in order to reap the benefits of algorithmic amplification.

That’s the technical explanation for what happened, and it certainly played a significant role in fueling the online rumor mill and amplifying conspiratorial claims to the point that they were covered daily by major newspapers around the world. But the other side of this story involves the psychological and social factors that drive people to engage in participatory disinformation, and the technological systems that incentivize their participation.

To be clear, it’s understandable why so many people got swept up in the whirlwind of speculation, and for the most part, people didn’t mean any harm by it.
The royal family’s lack of communication and the strange decision to release an edited photo of Kate didn’t exactly help to ease people’s suspicions, either. But as someone who has been the target of similar antisocial group behaviors, I can assure you that this type of phenomenon, even when it may seem justifiable, can inflict significant harm and quickly spiral out of control.

Furthermore, while most people joined in the spectacle simply out of curiosity, and perhaps a taste for scandals, those leading the charge were doing so for very different reasons. After all, there is money to be made in myth-making and spreading salacious rumors about celebrities. In fact, there is an entire class of influencers devoted to doing just that, and it is those people who are most responsible for the harm caused by normalizing and celebrating a ritual centered around harassment, dehumanization, invasion of privacy, and mob mentality.

Among those who participated in the construction of conspiracy theories and myths about Kate’s health and wellbeing were a variety of internet fandoms that have formed around various people, events, and subcultures, including members of the royal family. For weeks, these groups have put forth a variety of different theories about Kate’s whereabouts and the reason for her absence from the spotlight, with speculation ranging from rumors of her husband having an affair or her being hospitalized for an eating disorder, mental breakdown, or botched plastic surgery, all the way to baseless conspiracy theories claiming that she died or was murdered. These claims were not confined to the fringes of social media. Rather, they became headlines in tabloids and newspapers across the world, which gave them an appearance of legitimacy and further fueled the speculation process.

As we have witnessed over the past few weeks, people become quite attached to their version of events, even when it’s refuted by new evidence.
In fact, a non-trivial percentage of people are not even attached to a particular alternative narrative, but rather they’re attached to the idea that the official narrative is fake. As such, they will share and engage with nearly any alternative theory, with the more salacious storylines drawing the most attention.

To better understand this behavior, it’s helpful to look at the research exploring online fandoms and participatory disinformation, which explains how online communities co-create and maintain alternative versions of events that are accepted as reality within the group. When faced with news that contradicts or calls into question the veracity of those narratives, individuals who are part of these online communities will work together to weave the emerging information into the existing conspiracy theories (and, if needed, resurrect old conspiracy theories and rumors) in order to provide an alternative explanation of current events, or to attempt to prove that their preferred conspiracy theory was correct after all. Drawing upon selective evidence, cherry-picked data, and speculative reasoning, they construct compelling narratives that feed into people’s preexisting biases and exploit basic human needs like the desire to be part of a group and the desire for recognition, both of which are provided in exchange for participating in the process of crafting and disseminating these alternative narratives. Typically, this process plays out in a gamified manner, with users earning rewards in the form of praise, engagement, new followers, and the dopamine rush that accompanies all of those things in exchange for finding or simply inventing juicy bits of information to add to the narrative.

Consider, for example, that when Kate and William were spotted shopping at a farm store early last week (an outing that was captured on video by a passerby), the response from many social media users was to question whether the person in the video was really Kate and to suggest that perhaps it was actually a body double. Then, when Kate announced her cancer diagnosis, some users still were not ready to accept that version of events, so instead, they trotted out people claiming to be doctors, who proceeded to pick apart Kate’s story and cast doubt on her cancer diagnosis. Some users are alleging that the video in which she announced her diagnosis is fake, with some claiming that the “obviously fake video” is further proof that she is actually dead.

Meanwhile, others tried to use these events to drive a further wedge between the “Kate and William camp” and the “Meghan and Harry camp,” rekindling an ongoing feud between fandom communities who use details about the lives of the royal family to construct exemplars that are then weaponized and used as ammunition in a broader culture war over issues such as racism, misogyny, online harassment, and content moderation. It is during this process of turning actual people into characters to be used to wage a proxy war over contentious issues that have divided society for decades (in some cases, much longer) that the humanity of those people takes a backseat to the supposedly noble cause for which the internet mob has selected them to become figureheads.

Participatory disinformation is, at its core, a social-psychological phenomenon in which individuals who spend the most time and contribute the most to solving the mystery or keeping the (false) narrative going receive significant social recognition and praise, sometimes to the extent that it becomes part of their identity, prompting them to devote even more time to the endeavor. The dynamic not only removes the barriers to spreading disinformation, but actively encourages and rewards those who do it. In this way, engaging in participatory disinformation also exerts a normative influence that shapes the social norms within online communities, such that individuals do not have to worry about facing any sort of social consequences for spreading disinformation, nor do they feel pressured to adhere to mainstream narratives or standards of fact-checking and evidence.

By establishing these new rules of engagement, so to speak, members of online communities that engage in participatory disinformation also create new norms around expertise and gatekeeping, such that individuals no longer need any credentials, objective indicators of knowledge, or firsthand experience in order to become an authoritative source on a subject. Many of those who are viewed as authoritative voices in these communities are seen that way simply because of the amount of time they spend on the activity and their ability to craft entertaining, believable, and often intentionally offensive narratives from context-free bits of information that are assembled like puzzle pieces.

At a time when authoritarians are engaged in a global effort to undermine democracy by destroying the very notion of objective truth and shared reality, the existence of toxic online communities that are hostile towards objective evidence and corrections can’t simply be written off as fun and games or entertainment.
When large swaths of the online public stop believing in the importance of fact-based storytelling, the effects reverberate far beyond their initial context. Furthermore, when people experience harassment for simply trying to share facts or ask questions about a false narrative, they will eventually learn to stop saying anything at all. At the same time, those spreading falsehoods end up being emboldened by the positive feedback they receive and the feeling of invincibility that often accompanies it. In communities that engage in this type of participatory myth-making, influencers take on a celebrity-like status, shielded from criticism by loyal followers who view any attempt to correct or fact-check them as an attack on their group and/or identity.

Tying identity to specific information and narratives makes it a lot easier to form insular communities in which outside information that contradicts the preferred narrative is experienced as an attack on the group and the identities of those who belong to it. In this way, participatory disinformation encourages individuals to prioritize the identity-affirming aspects of information over the factual accuracy of that information. In other words, if an individual is faced with a piece of information that confirms what they want to believe about themselves, their group, and their place in the world, but that also happens to be untrue, the nature of participatory disinformation provides not only justification, but encouragement to share that information anyway.

Fandoms and similar online communities also often involve a sense of identity fusion, where individual identities become intertwined with the collective identity of the group.
This fusion plays a major role in motivating people to do things like vehemently defend their group’s chosen narrative and contribute actively to the dissemination of content that aligns with their collective identity, even if it involves disinformation. The same dynamics can also lead individuals to engage in harassment, intimidation, and other types of harmful (and at times criminal) behavior, in what they claim is an attempt to expose the truth by vilifying and attacking anyone they label as part of the supposed conspiracy or its cover-up.

Because these online communities are often close-knit, closed networks with high levels of engagement, it is easy for content to go viral within a given network. And because users tend to trust other members of their group more than those on the outside, content shared within the group is perceived as more credible and authentic, even if it includes disinformation. By turning this process of narrative construction and story-building into an immersive experience, participatory disinformation creates a sort of alternate reality in which false information may feel more true and authentic than reality itself. As such, users who share false content may avoid some of the normal hesitation that often comes along with engaging with disinformation, because in the alternate reality in which they are immersed, what they are sharing is widely accepted as authentic and permissible, and therefore doesn’t create friction with reality or with norms of honesty and credibility.

This is often achieved by crafting narratives about individual people that may align with broader cultural stereotypes and tropes, but that don’t necessarily apply to the person in question.
Essentially, people are turned into canvases upon which the worst attributes associated with aspects of their identity are projected, making them a stand-in for all of the negative feelings and beliefs that a group may hold about another group. A telltale sign that this may be happening is when people over-emphasize or exaggerate certain aspects of an individual’s identity, such as their race or socioeconomic status, while ignoring or even denying the existence of other aspects of their identity, such as their gender or their identity as a mother or cancer patient. This tactic of over- and under-emphasizing different parts of a person’s identity is often done in an effort to make it easier to vilify that person, while simultaneously suppressing potential feelings of sympathy or empathy that may arise due to shared identity characteristics or experiences. It’s a lot easier for the mob to justify harassment against a rich, elitist white woman than it is to justify the same behavior being directed at a mother who was just diagnosed with cancer, so the goal of these tactics is to get the public to view the target through a lens that strips away their humanity.

It’s also worth noting that many of these communities include significant numbers of inauthentic accounts of various types, including automated accounts, groups of accounts run by a single person, and accounts claiming a false identity. In these groups, it is common to see accounts participating in a variety of long-standing inauthentic campaigns targeting the same or similar issues, and it is not uncommon to see these accounts repurposed for use in different disinformation and harassment campaigns. The existence of these accounts in online communities makes those groups appear to be more popular and have more support than they actually do, which can make people more likely to join them or at least less likely to speak out against their toxic behaviors when they see them.

The perils of turning reality into a “Choose Your Own Adventure” game

As I’ve said several times on social media over the past couple of weeks, I really don’t have much interest in the royal family or the drama that surrounds them. However, I do care about the fact that the same tactics used to harass Kate are also used all the time against millions of other women who don’t have the same resources that she does to deal with the onslaught. I also care about how incidents like this shape our collective norms around privacy, civility, and expectations of accessibility.

Although Kate is a member of the royal family, and that does bring with it some unique responsibilities to the public, that doesn’t mean she’s obliged to tell the entire world about her private medical problems, nor does it justify spreading rumors and attacking her or her family. It’s a slippery slope once we start compromising our moral values to permit harassment in special circumstances; the norms we apply in incidents like this are eventually applied more widely, which is how we got to a place where anyone with a public profile, particularly women, can be viewed as fair game for harassment, ridicule, and abuse for the purpose of entertainment. Similarly, the excuses we make to justify targeting Kate will end up being the excuses offered up when these groups choose their next target, who will almost certainly not be as well positioned as Kate to deal with the onslaught of abuse. (And let’s go ahead and get rid of the laughable notion that online rumors are an effective way of holding the powerful accountable. If you want to spread conspiracy theories, no one can stop you, but at the very least, don’t try to justify it by lying to yourself about the virtue of doing so.)

There’s one more dynamic here that I think is worth pointing out.
What we have seen unfold over the past couple of weeks may not be the first example of this, but it is among the first (and probably the most glaring) incidents in which participatory disinformation has merged with generative AI to produce a phenomenon in which individuals are not only coming together to co-create narratives and engage in collective storytelling, but also taking part in the process of publicly discerning what is real versus what is the product of AI. I am going to dive into this further in an upcoming article, but this process is particularly interesting because it involves some of the same behaviors that we see in participatory disinformation, paired with tactics mimicking digital forensics and OSINT (open source intelligence), all of which are applied to the task of identifying supposed signs of AI-generated and manipulated media.

In some ways, this has been fascinating to see play out, yet in other ways, it has been terrifying to realize that the vast majority of people can’t discern real from fake, and it’s not because they are careless or unintelligent or otherwise lacking in ability, but because AI has become so good at mimicking reality that we have lost the ability to discern where one ends and the other begins. There are, of course, profound implications associated with this, not the least of which is that when individuals can’t tell what is real, they don’t necessarily respond by becoming more discerning consumers of information. Rather, many respond by giving up on the idea that an objective reality exists at all, and instead choose to make judgments about what is true based on what they want to be true and what they believe will help them win the battle of narratives.

By allowing disinformation, deep fakes, AI-generated media, and other forms of digital deception to permeate our information spaces, we are turning reality into a twisted choose-your-own-adventure game in which people can simply call into question any image or other type of media that doesn’t align with the narrative they want to believe, rather than doing the work of questioning their own beliefs and assumptions. As more and more people lose trust in the information they see online, we should expect this to become the new norm, yet at the same time, we should be extremely careful not to ever accept it as normal. Because it’s not. It’s actually indicative of very serious problems beneath the surface, among them the decline of democratic values in society. As Timothy Snyder wrote in his book On Tyranny, “To abandon facts is to abandon freedom. If nothing is true, then no one can criticize power, because there is no basis upon which to do so. If nothing is true, then all is spectacle.” Sounds familiar, no?

If we wish to avoid descending further into an authoritarian future, now is the time to establish guidelines and boundaries around the use of generative AI and similar technologies, as well as the use of social media platforms to direct harassment campaigns and wage information war against individuals and their families. Even in the absence of action by policymakers and tech companies, we can still decide on informal rules (i.e., norms) of conduct to discourage things like harassment-for-hire and profiting from the weaponization of individuals’ private lives, while promoting responsible information-sharing and encouraging people not to ignore deceptive, manipulative, or abusive behavior just because it’s coming from their “side” (in reality, people who routinely engage in harmful behaviors that damage our democracy and benefit autocrats are most certainly not on your side, even if they might appear to be based on shared interests or common enemies).

Furthermore, we can enforce these norms by disengaging from problematic online communities, unfollowing accounts that repeatedly display abusive and/or misleading behaviors, and showing support for those who become targets of disinformation and/or harassment campaigns. And for those of us who study online deception and hate, it’s up to us to protect the integrity of our work and the reputation of our field if we wish to be viewed as trusted sources by a public that is rapidly losing trust in just about everything. This means not only ensuring that we are putting out solid research and analysis, but also making sure that our tactics and methods are not being co-opted by bad actors, who often try to use claims of “fighting disinformation” or “countering hate” as cover for carrying out personal vendettas, politically motivated agendas, and profit-driven scams.

As AI-generated content becomes more sophisticated, cheaper to produce, and even more ubiquitous in online spaces, it will become easier and easier for powerful people and entities to use these tools to rewrite history and create convincing alternative realities that serve their own interests at the expense of everyone else. By allowing seemingly trivial events like the Kate Middleton drama to be defined by problematic actors offering up fictional accounts of reality, we are conditioning ourselves to do the same thing even when far more consequential matters are at stake. Holding power to account doesn’t mean wildly speculating about the activities of powerful actors like the royal family. Rather, it involves a commitment to truth and transparency to ensure that powerful actors can’t get away with substituting speculation for substance or lying to us under the guise of entertainment.
Furthermore, the longer we allow ourselves to live in separate realities characterized by competing narratives about the world around us, the harder it becomes to join together in our shared reality to exercise our collective power when it’s really necessary. And the more we cede our online spaces to coordinated disinformation and harassment campaigns, the easier it is to forget that the people being targeted — even when they’re rich and famous — are still human underneath it all.
