How AI-assisted coding will change software engineering: hard truths

A field guide that also covers why we need to rethink our expectations, and what software engineering really is.

A guest post by software engineer and engineering leader Addy Osmani

Hi, this is Gergely with a bonus issue of the Pragmatic Engineer Newsletter. In every issue, we cover topics related to Big Tech and startups through the lens of software engineers and engineering leaders. If you’ve been forwarded this email, you can subscribe here.

Happy New Year! As we look toward the innovations that 2025 might bring, it is a sure bet that GenAI will continue to change how we do software engineering.

It’s hard to believe that ChatGPT was first released just over two years ago, in November 2022. This was the point when large language models (LLMs) started to see widespread adoption. Even though LLMs are built in a surprisingly simple way, they produce impressive results in a variety of areas. Writing code turns out to be perhaps one of their strongest points. This is not all that surprising, given how:

Last year, we saw that about 75% of developers use some kind of AI tool for software engineering–related work, as per our AI tooling reality check survey. And yet, it feels like we’re still early in the tooling innovation cycle, and more complex approaches like AI software engineering agents are likely to be the center of innovation in 2025.

Mainstream media has been painting an increasingly dramatic picture of the software engineering industry. In March, Business Insider wrote about how “Software engineers are getting closer to finding out if AI really can make them jobless”, and in September, Forbes asked: “Are software engineers becoming obsolete?” While such articles get wide reach, they come from people who are not software engineers themselves, don’t use these AI tools, and are unaware of the efficiency (and limitations!) of these new GenAI coding tools.

But what can we realistically expect from GenAI tools for shaping software engineering? GenAI will change parts of software engineering, but it is unlikely to do so in the dramatic way that some headlines suggest. After two years of these tools being available, and with most engineering teams using them for 12 months or more, we can form a better-informed opinion of them.

Addy Osmani is a software engineer and engineering leader, in a good position to observe how GenAI tools are really shaping software engineering. He’s been working at Google for 12 years and is currently the Head of Chrome Developer Experience. Google is a company at the forefront of GenAI innovation. The company authored the 2017 research paper that introduced the Transformer architecture, which serves as the foundation for LLMs. Today, Google has built one of the most advanced foundation models, Gemini 2.0, and is one of OpenAI’s biggest competitors.

Addy summarized his observations and predictions in the article The 70% problem: Hard truths about AI-assisted coding.
It’s a grounded take on the strengths and weaknesses of AI tooling, one that highlights fundamental limitations of these tools, as well as the positives that are too good not to adopt as an engineer. It also offers practical advice for software engineers, from junior to senior, on how to make the most of these tools. With Addy’s permission, this is an edited version of his article, republished with more of my thoughts added at the end. This issue covers:

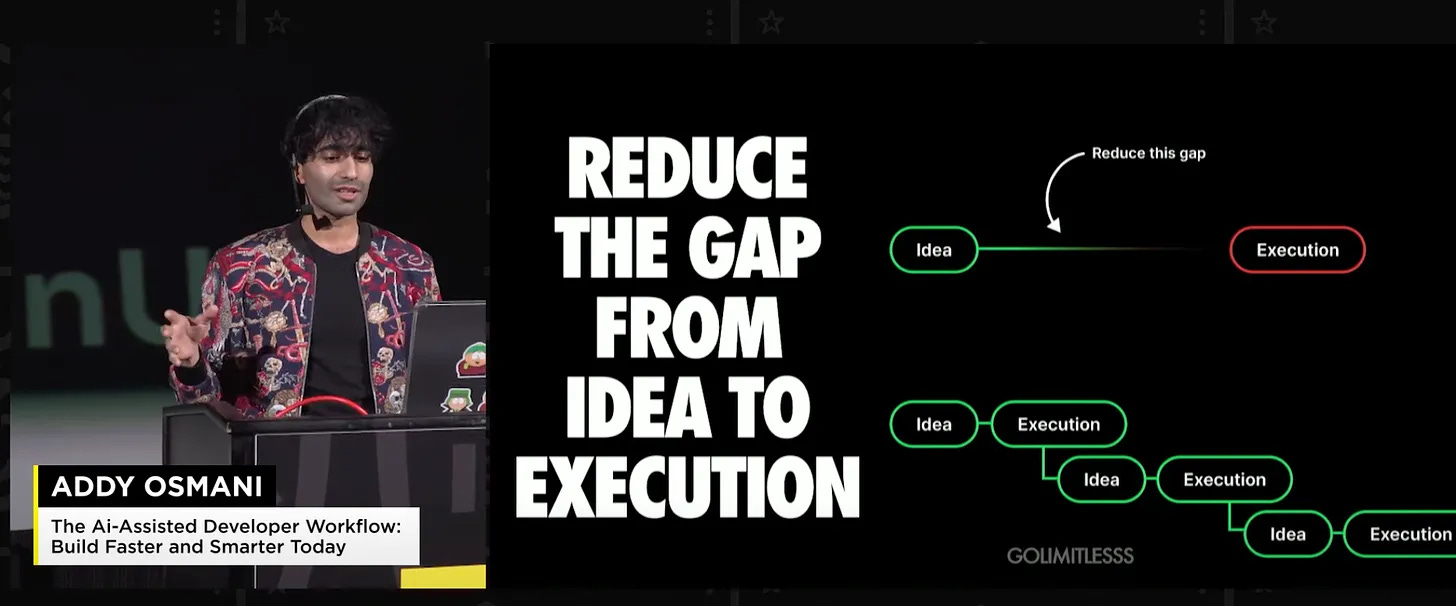

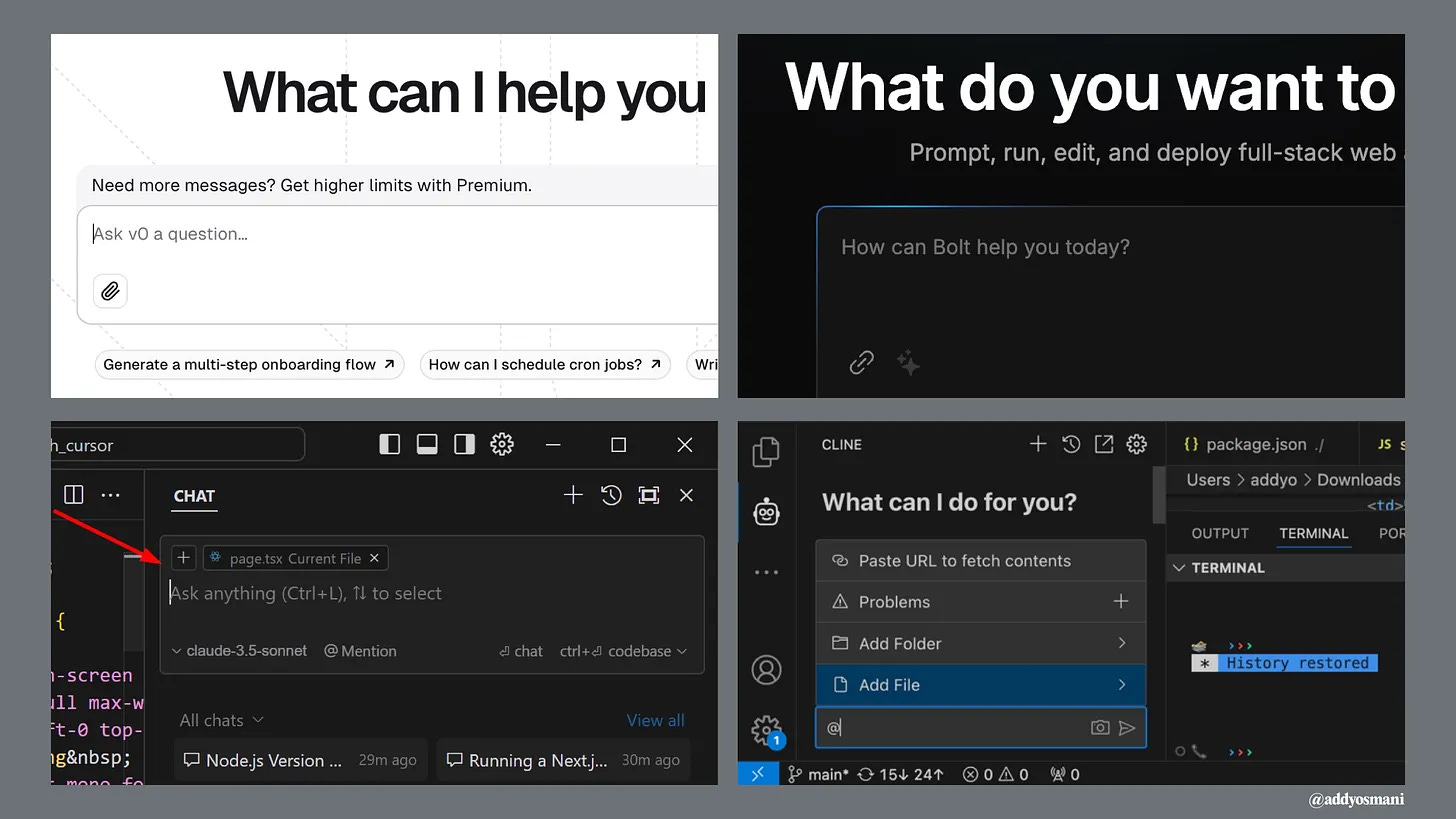

Addy’s name might ring a bell for many of you. In August, we published an excerpt from his new book, Leading Effective Engineering Teams. Addy also writes a newsletter called Elevate: subscribe to Elevate to get Addy’s posts in your inbox. With this, it’s over to Addy:

After spending the last few years embedded in AI-assisted development, I've noticed a fascinating pattern. While engineers report being dramatically more productive with AI, the actual software we use daily doesn’t seem to be getting noticeably better. What's going on here?

I think I know why, and the answer reveals some fundamental truths about software development that we need to reckon with. Let me share what I've learned.

I've observed two distinct patterns in how teams are leveraging AI for development. Let's call them the "bootstrappers" and the "iterators." Both are helping engineers (and even non-technical users) reduce the gap from idea to execution (or MVP).

1. How developers are actually using AI

The Bootstrappers: Zero to MVP

Tools like Bolt, v0, and screenshot-to-code AI are revolutionizing how we bootstrap new projects. These teams typically:

The results can be impressive. I recently watched a solo developer use Bolt to turn a Figma design into a working web app in next to no time. It wasn't production-ready, but it was good enough to get very early user feedback.

The Iterators: daily development

The second camp uses tools like Cursor, Cline, Copilot, and Windsurf for their daily development workflow. This is less flashy but potentially more transformative. These developers are:

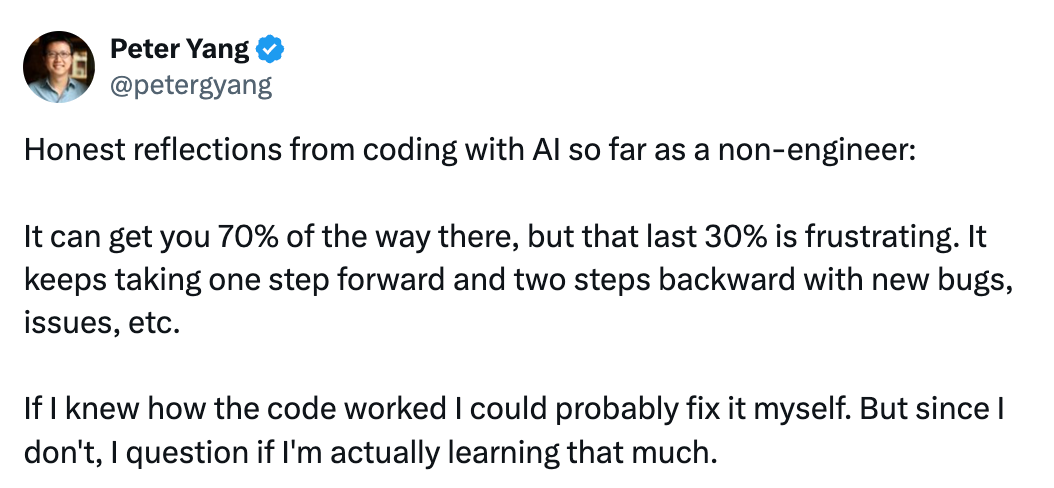

But here's the catch: while both approaches can dramatically accelerate development, they come with hidden costs that aren't immediately obvious.

2. The 70% problem: AI's learning curve paradox

A tweet that recently caught my eye perfectly captures what I've been observing in the field: Non-engineers using AI for coding find themselves hitting a frustrating wall. They can get 70% of the way there surprisingly quickly, but that final 30% becomes an exercise in diminishing returns.

This "70% problem" reveals something crucial about the current state of AI-assisted development. The initial progress feels magical: you can describe what you want, and AI tools like v0 or Bolt will generate a working prototype that looks impressive. But then reality sets in.

The two steps back pattern

What typically happens next follows a predictable pattern:

This cycle is particularly painful for non-engineers because they lack the mental models to understand what's actually going wrong. When an experienced developer encounters a bug, they can reason about potential causes and solutions based on years of pattern recognition. Without this background, you're essentially playing whack-a-mole with code you don't fully understand.

The hidden cost of "AI Speed"

When you watch a senior engineer work with AI tools like Cursor or Copilot, it looks like magic. They can scaffold entire features in minutes, complete with tests and documentation. But watch carefully, and you'll notice something crucial: They're not just accepting what the AI suggests. They're constantly:

In other words, they're applying years of hard-won engineering wisdom to shape and constrain the AI's output. The AI is accelerating implementation, but their expertise is what keeps the code maintainable.

Junior engineers often miss these crucial steps. They accept the AI's output more readily, leading to what I call "house of cards code": it looks complete but collapses under real-world pressure.

A knowledge gap

The most successful non-engineers I've seen using AI coding tools take a hybrid approach:

But this requires patience and dedication, which is exactly the opposite of what many people hope to achieve by using AI tools in the first place.

The knowledge paradox

Here's the most counterintuitive thing I've discovered: AI tools help experienced developers more than beginners. This seems backward. Shouldn't AI democratize coding? The reality is that AI is like having a very eager junior developer on your team. They can write code quickly, but they need constant supervision and correction. The more you know, the better you can guide them. This creates what I call the "knowledge paradox":

I've watched senior engineers use AI to:

Meanwhile, juniors often:

There's a deeper issue here: The very thing that makes AI coding tools accessible to non-engineers, their ability to handle complexity on your behalf, can actually impede learning. When code just "appears" without you understanding the underlying principles:

This creates a dependency where you need to keep going back to AI to fix issues, rather than developing the expertise to handle them yourself.

Implications for the future

This "70% problem" suggests that current AI coding tools are best viewed as:

But they're not yet the coding democratization solution many hoped for. The final 30%, the part that makes software production-ready, maintainable, and robust, still requires real engineering knowledge. The good news? This gap will likely narrow as tools improve. But for now, the most pragmatic approach is to use AI to accelerate learning, not replace it entirely.

3. What actually works: practical patterns

After observing dozens of teams, here's what I've seen work consistently:

"AI first draft" pattern

Let AI generate a basic implementation

"Constant conversation" pattern

Start new AI chats for each distinct task

"Trust but verify" pattern
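A minimal sketch of what "trust but verify" can look like in practice, written in Python purely for illustration (the article does not prescribe a language or a test setup). `slugify_draft` is a hypothetical stand-in for an AI-generated first draft that only handles the happy path. `slugify`, `CASES` and `failing_cases` are likewise illustrative names, not part of any tool. The idea is that the reviewer's checks, not how plausible the draft looks, decide whether the code is accepted.

```python
# Hypothetical stand-in for an AI-generated first draft: it passes the
# happy-path demo ("Hello World" -> "hello-world") and nothing else was checked.
def slugify_draft(title: str) -> str:
    return title.lower().replace(" ", "-")


# Reviewed version: treat every run of non-alphanumeric characters as a single
# separator, so stray whitespace and punctuation do not leak into the slug.
def slugify(title: str) -> str:
    words = "".join(ch if ch.isalnum() else " " for ch in title.lower()).split()
    return "-".join(words)


# Checks a reviewer writes before accepting either version: input -> expected slug.
CASES = {
    "Hello World": "hello-world",        # happy path
    "  Hello   World  ": "hello-world",  # stray whitespace
    "Hello, World!": "hello-world",      # punctuation
}


def failing_cases(candidate) -> list:
    """Return the inputs for which `candidate` disagrees with CASES."""
    return [text for text, expected in CASES.items() if candidate(text) != expected]


if __name__ == "__main__":
    print("draft fails on:", failing_cases(slugify_draft))
    print("reviewed fails on:", failing_cases(slugify))
```

The draft passes the demo input but fails the whitespace and punctuation cases. This is a small-scale version of the "house of cards code" problem described in section 2. The reviewed version passes all three cases.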

4. What does this mean for developers?

Despite these challenges, I'm optimistic about AI's role in software development. The key is understanding what it's really good for:

If you're just starting with AI-assisted development, here's my advice:

Start small

Stay modular

Trust your experience

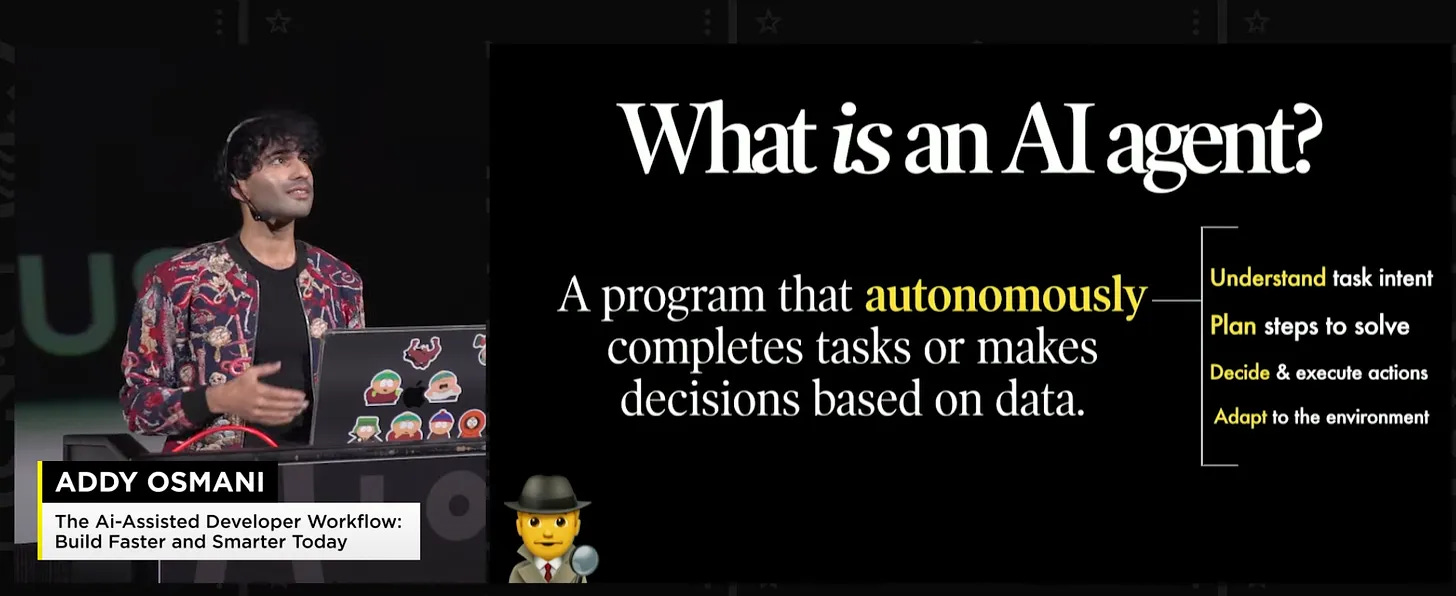

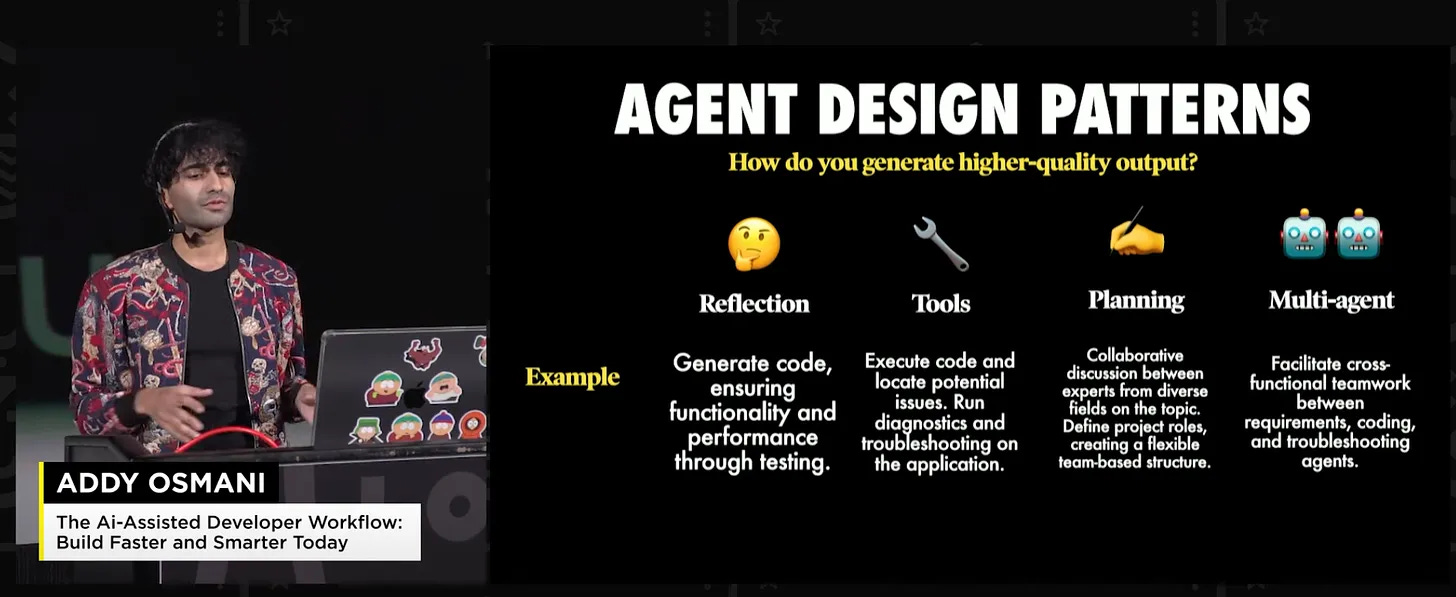

5. The rise of agentic software engineering

The landscape of AI-assisted development is shifting dramatically as we head into 2025. While the current tools have already changed how we prototype and iterate, I believe we're on the cusp of an even more significant transformation: the rise of agentic software engineering.

What do I mean by "agentic"? Instead of just responding to prompts, these systems can plan, execute, and iterate on solutions with increasing autonomy. If you’re interested in learning more about agents, including my take on Cursor/Cline/v0/Bolt, you may be interested in my recent JSNation talk.

We're already seeing early signs of this evolution:

From responders to collaborators

Current tools mostly wait for our commands. But look at newer features like Anthropic's computer use in Claude, or Cline's ability to automatically launch browsers and run tests. These aren't just glorified autocomplete. They're actually understanding tasks and taking the initiative to solve problems.

Think about debugging: Instead of just suggesting fixes, these agents can:

The multimodal future

The next generation of tools may do more than just work with code. They could seamlessly integrate:

This multimodal capability means they can understand and work with software the way humans do: holistically, not just at the code level.

Autonomous but guided

The key insight I've gained from working with these tools is that the future isn't about AI replacing developers. It's about AI becoming an increasingly capable collaborator that can take initiative while still respecting human guidance and expertise. The most effective teams in 2025 may be those that learn to:

The English-first development environment

As Andrej Karpathy noted: "The hottest new programming language is English." This is a fundamental shift in how we'll interact with development tools. The ability to think clearly and communicate precisely in natural language is becoming as important as traditional coding skills. This shift toward agentic development will require us to evolve our skills:

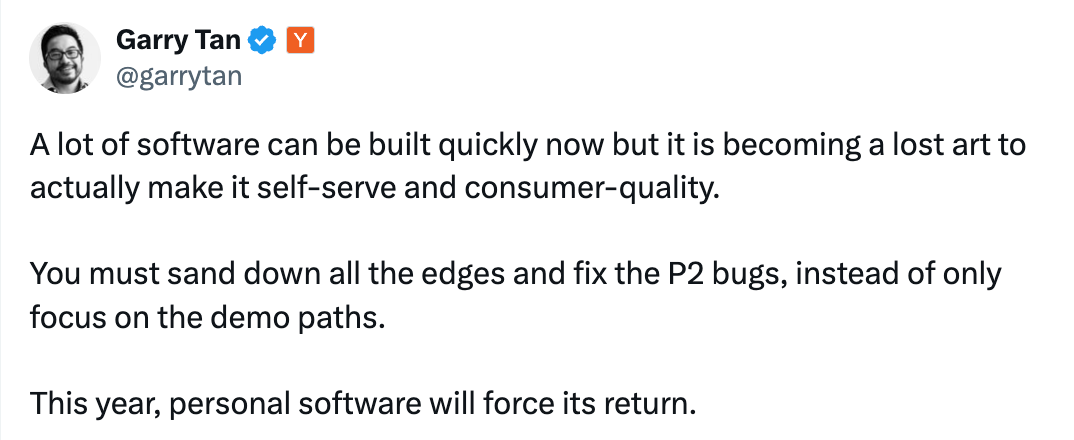
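To make "plan, execute, and iterate" and "autonomous but guided" more concrete, here is a minimal Python sketch of a bounded debugging loop with a human approval gate. It is an illustration built on assumptions, not a description of how Cline, Claude's computer use, or any other product is implemented. `run_agent`, `run_checks`, `propose_fix`, `apply_fix` and `approve` are hypothetical names, and the "model" is simply a function passed in by the caller.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AgentRun:
    """What happened during one bounded agent session."""
    attempts: int = 0
    fixed: bool = False
    log: List[str] = field(default_factory=list)


def run_agent(
    run_checks: Callable[[], Optional[str]],  # execute: returns a failure message, or None if green
    propose_fix: Callable[[str], str],        # plan: hypothetical model call, failure -> proposed change
    apply_fix: Callable[[str], None],         # act: apply the change (e.g. edit a file)
    approve: Callable[[str], bool],           # guided: a human accepts or vetoes each change
    max_attempts: int = 3,                    # bound the loop so the agent cannot run forever
) -> AgentRun:
    run = AgentRun()
    while run.attempts < max_attempts:
        failure = run_checks()
        if failure is None:
            run.fixed = True
            run.log.append("checks pass")
            return run
        run.attempts += 1
        change = propose_fix(failure)
        if not approve(change):
            run.log.append(f"rejected: {change}")
            return run
        apply_fix(change)
        run.log.append(f"applied: {change}")
    # Out of attempts: re-run the checks once to see whether the last change worked.
    run.fixed = run_checks() is None
    if run.fixed:
        run.log.append("checks pass")
    return run


if __name__ == "__main__":
    # Toy usage: a fake "bug" that the first approved change fixes.
    state = {"bug": True}
    result = run_agent(
        run_checks=lambda: "test_total failed" if state["bug"] else None,
        propose_fix=lambda failure: f"patch for {failure}",
        apply_fix=lambda change: state.update(bug=False),
        approve=lambda change: True,
    )
    print(result)
```

The design keeps two controls in the loop: a hard attempt limit and a human veto on every change. This is one way to read "autonomous but guided": the agent takes initiative, but a person still decides which changes land.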

6. The return of software as craft?

While AI has made it easier than ever to build software quickly, we're at risk of losing something crucial: the art of creating truly polished, consumer-quality experiences.

The demo-quality trap

It's becoming a pattern: Teams use AI to rapidly build impressive demos. The happy path works beautifully. Investors and social networks are wowed. But when real users start clicking around? That's when things fall apart. I've seen this firsthand:

These aren't just P2 bugs. They're the difference between software people tolerate and software people love.

The lost art of polish

Creating truly self-serve software, the kind where users never need to contact support, requires a different mindset:

This kind of attention to detail (perhaps) can't be AI-generated. It comes from empathy, experience, and deep care about craft.

The renaissance of personal software

I believe we're going to see a renaissance of personal software development. As the market gets flooded with AI-generated MVPs, the products that will stand out are those built by developers who:

The irony? AI tools might actually enable this renaissance. By handling the routine coding tasks, they free up developers to focus on what matters most: creating software that truly serves and delights users.

The bottom line

AI isn't making our software dramatically better because software quality was (perhaps) never primarily limited by coding speed. The hard parts of software development (understanding requirements, designing maintainable systems, handling edge cases, ensuring security and performance) still require human judgment.

What AI does do is let us iterate and experiment faster, potentially leading to better solutions through more rapid exploration. But this will only happen if we maintain our engineering discipline and use AI as a tool, not as a replacement for good software practices. Remember: The goal isn't to write more code faster. It's to build better software. Used wisely, AI can help us do that. But it's still up to us to know what "better" means and how to get it.

Additional thoughts

Gergely again. Thank you, Addy, for this pragmatic summary on how to rethink our expectations of AI and software engineering. If you enjoyed this piece from Addy, check out his other articles and his latest book: Leading Effective Engineering Teams. Here are my additional thoughts on AI and software engineering.

A good time to refresh what software engineering really is

Much of the discourse on AI tooling for software engineering focuses on code generation capabilities, and rightfully so. AI tools are impressive at generating working code from prompts, or suggesting inline code as you build software. But how much of the process of building software is coding itself? About 50 years ago, Fred Brooks estimated that it is around 15-20% of all time spent. Here are Brooks’ thoughts from The Mythical Man-Month:

My take is that today, software engineers probably spend their time like this:

At the same time, building standout software has a lot of other parts:

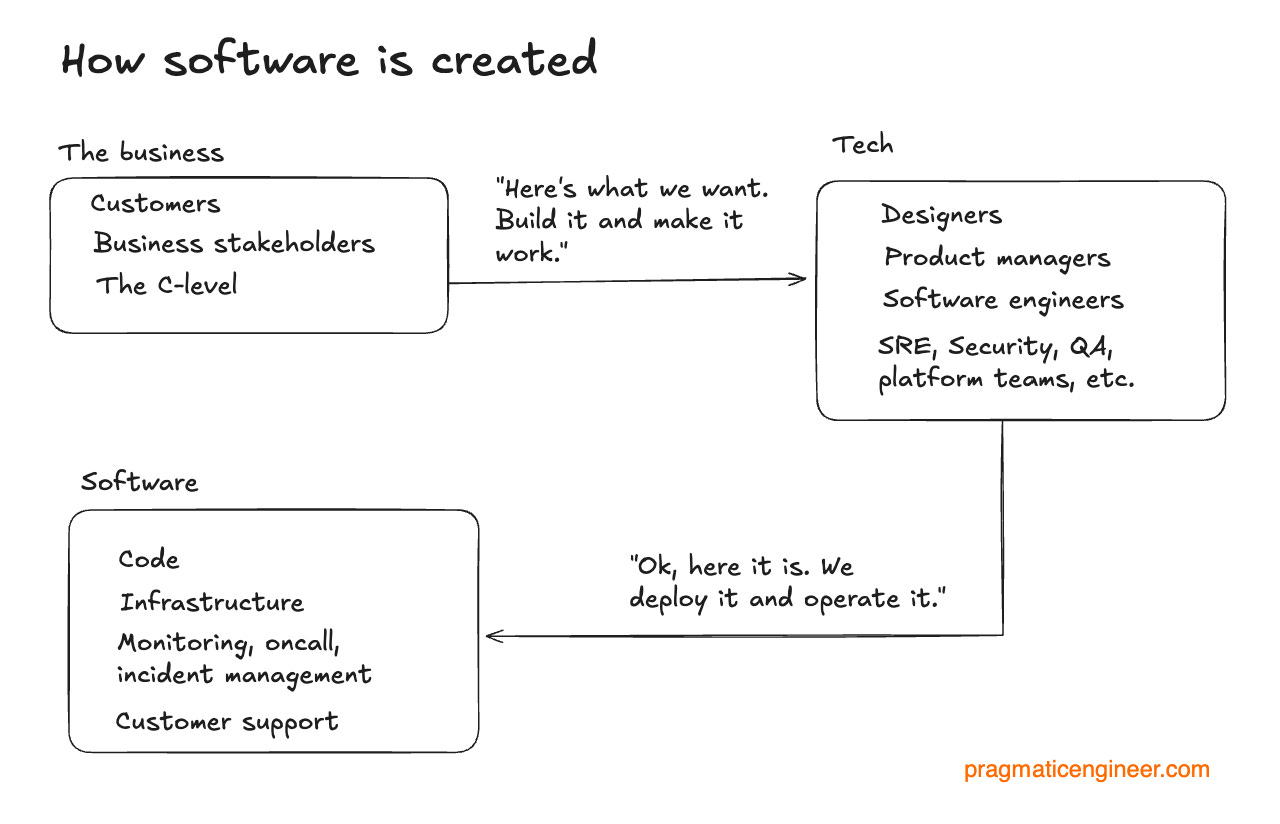

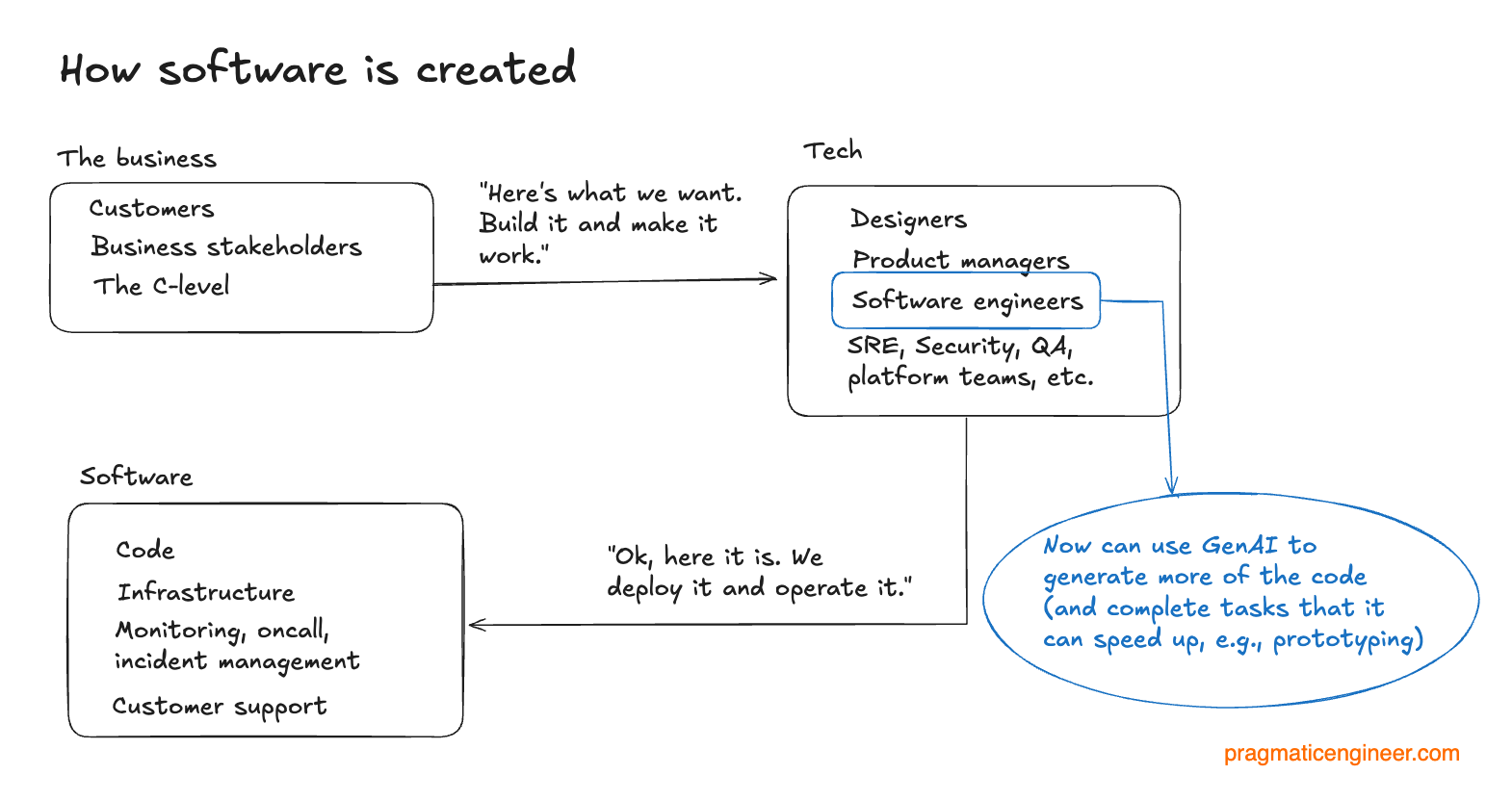
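Brooks' estimate can be turned into a quick back-of-envelope check on how much faster coding alone can make a project. The sketch assumes Brooks' well-known scheduling rule of thumb from The Mythical Man-Month: one-third planning, one-sixth coding, and one-half testing, split equally between component/early system test and full system test. It also assumes an Amdahl's-law style model in which only the coding phase speeds up. `schedule_saving` is an illustrative name, and real projects will not split this neatly.

```python
from fractions import Fraction

# Brooks' rule of thumb for scheduling a software task (The Mythical Man-Month, 1975).
BROOKS_SCHEDULE = {
    "planning": Fraction(1, 3),
    "coding": Fraction(1, 6),
    "component test and early system test": Fraction(1, 4),
    "system test": Fraction(1, 4),
}


def schedule_saving(shares: dict, phase: str, speedup: int) -> Fraction:
    """Share of the total schedule saved if only `phase` becomes `speedup` times faster.

    All other phases are assumed to take exactly as long as before
    (an Amdahl's-law style estimate, not a claim made by Brooks).
    """
    share = shares[phase]
    return share - share / speedup


if __name__ == "__main__":
    assert sum(BROOKS_SCHEDULE.values()) == 1  # the four phases cover the whole schedule
    for speedup in (2, 10, 100):
        saved = schedule_saving(BROOKS_SCHEDULE, "coding", speedup)
        print(f"coding {speedup}x faster -> {float(saved):.1%} of the schedule saved")
```

With these shares, a 10x faster coding phase saves 15% of the schedule. Even a 100x speedup saves only 16.5%, and no speedup can save more than the one-sixth that coding takes up in the first place.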

AI tools today can help a lot with the “Build” part. But here is a good question: just how useful are they for the other 7 things that are also part of software engineering?

Needing no developers: the dream since the 1960s

Non-technical people creating working software without needing to rely on software developers has been the dream since the 1960s. Coding is about translating from what people want (the customers, business stakeholders, the product manager, and so on) to what the computer understands. LLMs offer us a higher level of abstraction where we can turn English into code. However, this new abstraction does not change the nature of how software is created (and what software is), which is this:

GenAI tools don’t change the process, but they do make some of the coding parts more efficient:

Throughout the history of technology, new innovations promised the ability for business folks to collapse or bypass the “tech” part, and get straight to working software from their high-level prompts. This was the aspiration of:

Unsurprisingly, several GenAI coding startups aspire to the same goal: to allow anyone to create software by using the English language. In the past, we have seen success for simpler use cases. For example, these days, no coding knowledge is needed to create a website: non-technical people can use visual editors and services like Wix.com, Webflow, Ghost or WordPress.

The higher-level the abstraction, the harder it is to specify how exactly the software should work. No-code solutions already ran into this exact limitation. As advisory CTO Alex Hudson writes in his article The no-code delusion:

For more complex software, it’s hard to imagine software engineers not taking part in planning, building and maintaining it. And the more GenAI lowers the barrier for non-technical people to create software, the more software there will be to maintain.

AI agents: a major promise, but also a big “unknown” for 2025

Two years after the launch of LLMs, many of us have gotten a pretty good handle on how to use them to augment our coding and software engineering work. They are great for prototyping, switching to less-familiar languages, and tasks where you can verify their correctness and call out hallucinations or incorrect output.

AI agents, on the other hand, are in their infancy. Most of us have not used them extensively. There is only one generally available agent, Devin, at $500/month, and early responses are mixed.

A lot of venture funding will be pouring into this area. We’ll see more AI coding agent tools launch, and the price point will also surely drop as a result. GitHub Copilot is likely to make something like Copilot Workspace (an agentic approach) generally available in 2025. And we’ll probably see products from startups like /dev/agents, founded by Stripe’s former CTO, David Singleton.

AI agents trade off latency and cost (much longer time spent computing results and running prompts several times, which these startups describe as “thinking”) for accuracy (better results, based on the prompts). There are some good questions about how much accuracy will improve with this latency+cost tradeoff, and which engineering use cases will see a significant productivity boost as a result.

Demand for experienced software engineers could increase

Experienced software engineers could be in more demand in the future than they are today. The common theme we’re seeing with AI tooling is how senior-and-above engineers can use these tools more efficiently, as they can “aim” better with them.
When you know what “great output” looks like, you can prompt better, stop code generation when it’s getting things wrong, and know when to stop prompting and go straight to the source code to fix it yourself.

We will see a lot more code produced with the help of these AI tools, and a lot more people and businesses start building their own solutions. As these solutions hit a level of complexity, it’s a safe bet that many of them will need to bring in professionals as they attempt to tame the complexity: complexity that requires experienced engineers to deal with. Existing tech companies will almost certainly produce more code with AI tools, and they will rely on experienced engineers to deal with the increase in complexity that necessarily follows.

As a software engineer, mastering AI-assisted development will make you more productive, and also more valuable. It’s an exciting time to be working in this field: we’re living through a time of accelerated tooling innovation. It does take time to figure out how to “tame” the current tools in a way that makes you the most productive, so experiment with them!

I hope you’ve found the practical approaches from Addy helpful. For additional pointers, see the issue AI Tooling for Software Engineers in 2024: Reality Check.

If you enjoyed this post, you might enjoy my book, The Software Engineer's Guidebook. Here is what Tanya Reilly, senior principal engineer and author of The Staff Engineer's Path, said about it:
